The next code is for loading some sample records. Note that

DimDate is loaded with

WideWorldImportersDW database as its date dimension table contains records for the current year. However, this can be loaded using a simple script as well.

Have a look on records related to

DimEmployee. Since it is a SCD Type II dimension, it holds history records. As you see, there are two records to

James Phil with validity period.

-- Inserting date records from AdventureWorks table

INSERT INTO dbo.DimDate

(DateKey, [Date], [Month], MonthNumber, QuarterName, QuarterNumber, [Year])

SELECT CONVERT(int, CONVERT(varchar(8), [Date], 112)), [Date], [Month], [Calendar Month Number]

, 'Quarter ' + CONVERT(varchar(2), DATEPART(Q, [Date])), DATEPART(Q, [Date]), [Calendar Year]

FROM WideWorldImportersDW.Dimension.Date;

-- Inserting records to Customer

INSERT INTO dbo.DimCustomer

(FullName, Town, SourceKey)

VALUES

('Jane Knight', ' Town 1', 100)

, ('Jack walter', 'Town 2', 101);

-- Inserting records to Product

INSERT INTO dbo.DimProduct

(Product, Brand, SourceKey)

VALUES

('Product 1', ' Brand 1', 22)

, ('Product 2', 'Brand 3', 23);

-- Inserting records to Customer

INSERT INTO dbo.DimEmployee

(Name, SalesTown, StartDate, EndDate, IsCurrent, SourceKey)

VALUES

('Peter Kevin', 'Town 1', '2016-06-01', null, 1, 200)

, ('James Phil', 'Town 2', '2016-06-01', '2016-06-30', 0, 201)

, ('James Phil', 'Town 3', '2016-06-30', null, 1, 201);

Next code is for creating the

Fact table. Generally,

Fact Table is a partitioned table. Therefore, this code creates a partition function and scheme for it. Note that, the best practice is, have different

File Groups for partitions but let's just use

PRIMARY (I will be discussing partition switching on fact loading with another post, extending the same code).

-- creating the partition

CREATE PARTITION FUNCTION pf_SalesDate (int)

AS RANGE RIGHT

FOR VALUES (20160101, 20160201, 20160301, 20160401, 20160501, 20160601, 20160701)

GO

-- creating the scheme

CREATE PARTITION SCHEME ps_SalesDate

AS PARTITION pf_SalesDate

TO ([PRIMARY], [PRIMARY], [PRIMARY], [PRIMARY], [PRIMARY], [PRIMARY], [PRIMARY], [PRIMARY])

GO

CREATE TABLE FactSales

(

DateKey int NOT NULL INDEX IX_FactSales CLUSTERED,

ProductKey int NOT NULL,

CustomerKey int NOT NULL,

EmployeeKey int NOT NULL,

SalesAmount money NOT NULL,

SalesQuantity smallint NOT NULL

)

ON ps_SalesDate (DateKey)

GO

Let's insert some sample records into the

Fact Table too.

INSERT INTO FactSales (DateKey, ProductKey, CustomerKey, EmployeeKey, SalesAmount, SalesQuantity)

VALUES

(20160605, 1, 1, 1, 500, 2)

, (20160615, 2, 2, 1, 500, 2)

, (20160622, 2, 2, 2, 500, 2)

, (20160624, 2, 1, 1, 500, 2)

Done, now we have to create a SSIS package for loading new records. As you noted, we have records related to June 2016, and we will be loading records related to July 2016. Let's use following recordset for loading the

Fact Table.

SELECT CONVERT(date, '2016-07-01') As TransactionDate, 22 AS ProductId, 100 AS CustomerId, 200 AS EmployeeId, 500 AS SalesAmount, 2 AS SalesQuantity

UNION ALL

SELECT CONVERT(date, '2016-07-12') As TransactionDate, 23 AS ProductId, 101 AS CustomerId, 200 AS EmployeeId, 500 AS SalesAmount, 2 AS SalesQuantity

UNION ALL

SELECT CONVERT(date, '2016-07-14') As TransactionDate, 23 AS ProductId, 100 AS CustomerId, 201 AS EmployeeId, 500 AS SalesAmount, 2 AS SalesQuantity

UNION ALL

SELECT CONVERT(date, '2016-07-22') As TransactionDate, 22 AS ProductId, 100 AS CustomerId, 201 AS EmployeeId, 500 AS SalesAmount, 2 AS SalesQuantity

Let's create a SSIS package and add a connection to the data warehouse. I have a database named

Sales and will be using it as the source even though we do not extract records from the source. As you see, I have a

DataFlow Task in the

Control Flow.

Since we do not get surrogate key values from the source, we need to have

Lookups for dimension tables for getting surrogate key values. As you see with the image below, I have added

OLEDB Source to connect with

Sales and four of

Lookups for getting surrogate key values related to Date, Customer, Employee and Product.

Let's have a look on source first. It is configured with

Sales database and the query above is used for populating required records.

There are three

Type I type dimensions. Let's have a look on one and see how it needs to be checked. In order to get

DateKey from the

DimDate dimension, we need to look up

DimDate by using

TransactionDate that comes with the source. We need to configure the lookup to

DataWarehouse connection and select the lookup table as

DimDate. Once the connection is set, we need to link

TransactionDate coming from the source with

Date in the dimension. We need to select

DateKey as it is the one we need when we insert records to the

Fact Table. The selected

DateKey is added to the flow automatically.

The similar configuration is required for

DimCustomer and

DimProduct for getting surrogate key values. We need to link

CustomerID of the source to

SourceKey in the

DimCustomer table and get the

CustomerKey. We need to link

ProductID of the source to

SourceKey in the

DimProduct table and get the

ProductKey. However, getting

EmployeeKey is different as it is as SCD Type II dimension. Let's have a look on the configuration of

Employee Lookup.

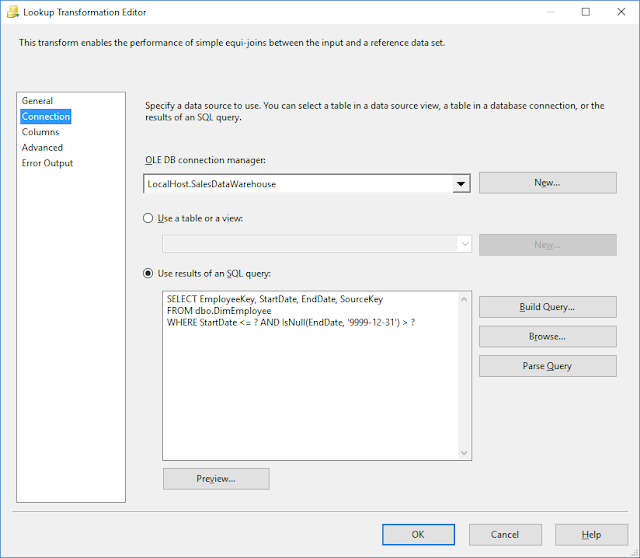

I will take you through the steps required for configuring this. First we need to make sure that

Cache mode is set to either

Partial Cache or

No Cache because we are going to parameterize the lookup query.

Next is setting up the connection. We need to connect it to

DataWarehouse and use a query for loading dimension records. Note the way it has been written. I have used question marks for parameterizing the query as we need to pick the right record related to the

TransactionDate.

Just like the other dimensions, we need to match two columns from the source and dimension to find the record. However, additional link is required for getting values for parameters, hence need to link

TransactionDate with

StartDate though that is not the exact link we need.

You will see the reason for joining

TransactionDate and

StartDate with next step. We need to adjust the lookup query now, need to do it with

Advanced section. It is required to adjust the query like below.

Once adjusted, need to set parameters like below. It allows us to select

TransactionDate as a parameter value because the above join. Note that parameters a

0-based indexed.

All done, finally it needs the destination. Set

OLEDB Destination for loading the

FactSales as the last step the of the

DataFlow task. Once all configurations are done, you can run the package and see how the

FactSales has been loaded.