Slowly Changing Dimensions (SCDs) are business entities in DW/BI systems whose attributes change infrequently. To load SCDs, we commonly use a SQL Server Integration Services (SSIS) Data Flow component: the Slowly Changing Dimension component. It is a wizard that helps us handle changing attributes.

If you are an SSIS developer and have used the SCD wizard, you have already seen how poorly it performs. It works fine with a dataset of fewer than around 5,000 records (this is what I have seen; please do not consider it a benchmark) but becomes very slow beyond that. Recently, I noticed that one project had used the SCD wizard for almost all data loading, and the time taken to complete the load had gone from 5 minutes to 5-6 hours. The load time increases significantly as the data volume grows.

How to improve the performance of loading of SCDs?

I had to give a solution for this. Two things came to mind: one is the Kimball Method SSIS Slowly Changing Dimension Component (I will make a separate post on this) and the other is the T-SQL MERGE statement. Although the MERGE implementation is a bit complex, I decided to recommend MERGE because many have reported that it performs very well. The table below compares three different approaches to SCD loading against some criteria (quoted from Matt Masson’s presentation named Performance Design Pattern).

You can easily see that MERGE gives the best performance, though it has drawbacks.

Types of Slowly Changing Dimensions

Slowly Changing Dimensions are categorized into three types: Type 1, Type 2, and Type 3. Type 1 does not maintain the history of changing attributes; it overwrites the attribute values. Type 2 maintains historical values for changing attributes. Type 3, which we do not use much (I have not used it at all), maintains separate columns for changed attributes. The SSIS SCD wizard supports both Type 1 and Type 2.
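To make the three types concrete, here is a minimal sketch that applies each kind of change by hand to a single row. Everything in it is an assumption made for illustration: the table variable @Customer, the City and PreviousCity columns, and the sample values are hypothetical and unrelated to the tables used later in this post.

```sql
-- Hypothetical demo table (a table variable), for illustration only.
DECLARE @Customer TABLE
(
    CustomerKey int IDENTITY(1,1) PRIMARY KEY
    , SourceCode char(5) NOT NULL
    , LastName varchar(50) NOT NULL       -- handled as Type 1
    , City varchar(50) NOT NULL           -- handled as Type 3 (current value)
    , PreviousCity varchar(50) NULL       -- handled as Type 3 (prior value)
    , Country varchar(50) NOT NULL        -- handled as Type 2
    , StartDate date NOT NULL
    , EndDate date NOT NULL
);

INSERT INTO @Customer (SourceCode, LastName, City, PreviousCity, Country, StartDate, EndDate)
VALUES ('X0001', 'Smith', 'Pune', NULL, 'India', '20090101', '99991231');

-- Type 1: overwrite in place; the old LastName is lost.
UPDATE @Customer
SET LastName = 'Smyth'
WHERE SourceCode = 'X0001';

-- Type 3: keep only the prior value, in its own column.
-- Both assignments read the pre-update values, so PreviousCity receives the old City.
UPDATE @Customer
SET PreviousCity = City, City = 'Mumbai'
WHERE SourceCode = 'X0001';

-- Type 2: close the current row, then add a new row for the new version.
UPDATE @Customer
SET EndDate = '20100601'
WHERE SourceCode = 'X0001' AND EndDate = '99991231';

INSERT INTO @Customer (SourceCode, LastName, City, PreviousCity, Country, StartDate, EndDate)
VALUES ('X0001', 'Smyth', 'Mumbai', 'Pune', 'Germany', '20100601', '99991231');

SELECT * FROM @Customer ORDER BY CustomerKey;
```

The final SELECT returns two rows for the one customer: the expired version and the current one. Only the Type 2 change adds a row; the Type 1 and Type 3 changes modify the existing row.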

Using MERGE instead of SCD wizard

Replacing the SCD wizard with MERGE is not straightforward. If the SCD has both Type 1 and Type 2 attributes, they need to be handled separately. Let’s create a simple table with the following set of data to see how to handle them with MERGE.

The following attributes in this table are Type 1: FirstName and LastName.

The following attributes are Type 2: MaritalStatus and Country.

The following script creates the dimension table and inserts data:

    CREATE TABLE dbo.DimCustomer
    (
        CustomerKey int IDENTITY(1,1) PRIMARY KEY
        , SourceCode char(5) NOT NULL
        , FirstName varchar(50) NOT NULL -- Type 1
        , LastName varchar(50) NOT NULL -- Type 1
        , MaritalStatus char(1) NOT NULL -- Type 2
        , Country varchar(50) NOT NULL -- Type 2
        , StartDate date NOT NULL
        , EndDate date NOT NULL
        -- Unique per version: a Type 2 change adds another row for the same SourceCode,
        -- so a constraint on SourceCode alone would reject it
        , CONSTRAINT IX_DimCustomer_SourceCode_StartDate UNIQUE (SourceCode, StartDate)
    )
    GO

    INSERT INTO dbo.DimCustomer
        (SourceCode, FirstName, LastName, MaritalStatus, Country
        , StartDate, EndDate)
    VALUES
        ('AAAA1', 'Dinesh', 'Priyankara', 'M', 'Sri Lanka'
        , '01/01/2009', '12/31/9999')
        , ('AAAA2', 'Yeshan', 'Senthush', 'S', 'Sri Lanka'
        , '01/01/2009', '12/31/9999')
        , ('AAAA3', 'Jane', 'Alvarez', 'S', 'France'
        , '01/01/2009', '12/31/9999')
        , ('AAAA4', 'Shannon', 'Carlson', 'M', 'India'
        , '01/01/2009', '12/31/9999')
    GO

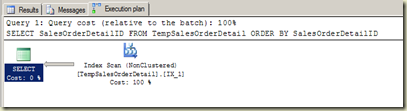

When SCD loading is handled with SSIS, what we usually do is send the captured data set through the SCD component. The SCD component inserts new data, updates Type 1 data, and finally inserts Type 2 data. If we are to use MERGE, we need to send the captured data to a temporary table and then use an Execute SQL Task to execute the MERGE statement. Assume that the following table is the temporary table and that it is loaded with captured data.

This code creates the table and populates it with data:

    CREATE TABLE dbo.TempDimCustomer
    (
        SourceCode char(5) NOT NULL
        , FirstName varchar(50) NOT NULL
        , LastName varchar(50) NOT NULL
        , MaritalStatus char(1) NOT NULL
        , Country varchar(50) NOT NULL
    )
    GO

    INSERT INTO dbo.TempDimCustomer
        (SourceCode, FirstName, LastName, MaritalStatus, Country)
    VALUES
        ('AAAA1', 'Dinesh', 'Priyankara', 'M', 'Sri Lanka')
        , ('AAAA2', 'Yeshan', 'Santhush', 'S', 'Sri Lanka')
        , ('AAAA3', 'Jane', 'Alvarez', 'M', 'France')
        , ('AAAA4', 'Shannon', 'Carlson', 'M', 'German')
        , ('AAAA5', 'Jon', 'Yang', 'M', 'Japan')
    GO

Handling Type 1 attributes

The code below shows the MERGE statement that updates Type 1 attributes. Note that it matches on SourceCode alone, so it goes through all records for a customer, including older Type 2 versions, and updates them.

    MERGE INTO dbo.DimCustomer c
    USING dbo.TempDimCustomer tc
        ON (c.SourceCode = tc.SourceCode)
    WHEN MATCHED
        AND
        (c.FirstName != tc.FirstName
        OR c.LastName != tc.LastName)
        THEN UPDATE
            SET c.FirstName = tc.FirstName
            , c.LastName = tc.LastName;
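The != comparisons work here because every column is declared NOT NULL. If the Type 1 attributes allowed NULLs, a comparison against NULL would not evaluate to true and such changes would be skipped. A minimal sketch of one common alternative, assuming the same two tables, is to detect differences with EXISTS and EXCEPT, which treats two NULLs as equal and a NULL as different from a value:

```sql
-- Sketch only: NULL-safe change detection for Type 1 attributes.
-- Uses the same dbo.DimCustomer and dbo.TempDimCustomer tables as above.
MERGE INTO dbo.DimCustomer AS c
USING dbo.TempDimCustomer AS tc
    ON (c.SourceCode = tc.SourceCode)
WHEN MATCHED
    -- EXCEPT returns a row only when the two column lists differ
    AND EXISTS (SELECT tc.FirstName, tc.LastName
                EXCEPT
                SELECT c.FirstName, c.LastName)
    THEN UPDATE
        SET c.FirstName = tc.FirstName
        , c.LastName = tc.LastName;
```

For the NOT NULL columns in this post, both forms update the same rows, so this variant only matters once nullable attributes are involved.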

Handling Type 2 attributes

Handling Type 2 is tricky. If a record has changed, we need to update the old record, setting its EndDate to the current date, and then insert a new record for it with the current date as StartDate. See the code below:

    -- Capture the load date once so the expired row's EndDate
    -- and the new row's StartDate always agree
    DECLARE @Today date = CAST(GETDATE() AS date);

    INSERT INTO dbo.DimCustomer
        (SourceCode, FirstName, LastName, MaritalStatus, Country
        , StartDate, EndDate)
    SELECT Modified.SourceCode, Modified.FirstName
        , Modified.LastName, Modified.MaritalStatus
        , Modified.Country, Modified.StartDate
        , Modified.EndDate
    FROM
        (MERGE INTO dbo.DimCustomer c
        USING dbo.TempDimCustomer tc
            ON (c.SourceCode = tc.SourceCode)
        -- New customers: insert them as current rows
        WHEN NOT MATCHED
            THEN INSERT
                (SourceCode, FirstName, LastName, MaritalStatus, Country
                , StartDate, EndDate)
            VALUES
                (tc.SourceCode, tc.FirstName, tc.LastName, tc.MaritalStatus, tc.Country
                , @Today, '12/31/9999')
        -- Existing customers with a Type 2 change: expire the current row
        WHEN MATCHED
            AND c.EndDate = '12/31/9999'
            AND (c.MaritalStatus != tc.MaritalStatus
                OR c.Country != tc.Country)
            THEN UPDATE
                SET c.EndDate = @Today
        OUTPUT
            $action AS Action, tc.SourceCode, tc.FirstName, tc.LastName
            , tc.MaritalStatus, tc.Country
            , @Today AS StartDate, '12/31/9999' AS EndDate) AS Modified
    -- Only expired rows need a new version; freshly inserted rows are skipped
    WHERE Modified.Action = 'UPDATE';

Look at the inner MERGE first. Its WHEN NOT MATCHED clause inserts new records. The WHEN MATCHED clause then finds Type 2 changes. Note that the check is done only on the latest records (EndDate = ‘12/31/9999’). When a change is found, EndDate is updated and the record is returned through the OUTPUT clause of the MERGE. The outer INSERT statement captures this output, keeps only the rows whose action is UPDATE, and inserts them as new records. Done!

Please test both approaches before deciding on the technique. If the data set is large, it would be better to use MERGE instead of the SSIS SCD component.
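A quick way to check the result is to list every version of each customer and then look for customers that do not have exactly one current row. This is a minimal sketch against the dbo.DimCustomer table from this post; the RowStatus and CurrentRows column names are made up for illustration.

```sql
-- List all versions per customer, oldest first.
SELECT SourceCode, FirstName, LastName, MaritalStatus, Country
    , StartDate, EndDate
    , CASE WHEN EndDate = '12/31/9999' THEN 'Current' ELSE 'Expired' END AS RowStatus
FROM dbo.DimCustomer
ORDER BY SourceCode, StartDate;

-- Sanity check: every SourceCode should have exactly one current row.
-- Any row returned here points to a problem in the load.
SELECT SourceCode, COUNT(*) AS CurrentRows
FROM dbo.DimCustomer
WHERE EndDate = '12/31/9999'
GROUP BY SourceCode
HAVING COUNT(*) <> 1;
```

Assuming the Type 2 statement completed successfully on the sample data, AAAA3 and AAAA4 should each show one expired and one current row, AAAA5 should appear as a single current row, and the second query should return nothing.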