One of the sessions we delivered with SQL Server Sri Lanka User Group Meeting – October 2013 was related to SQL Server 2014: In-Memory OLTP. I am sure that everyone was thrilled seeing, inserting millions of records into SQL Server table within seconds. Initially it amazed me too :), I believe that this is the key for 2014, just like Columnstore in 2012.

Here is the demo code I used with the session.

During the demonstration, I showed two scenarios based on three tables;

-

Normal disk-based table

-

In-Memory Durable table

-

In-Memory non-durable table.

Once scenario is inserting records from another table. Other is inserting another set with a stored procedure. Before going through the code, let’s understand In-Memory OLTP.

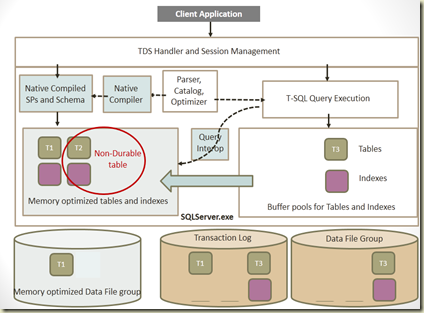

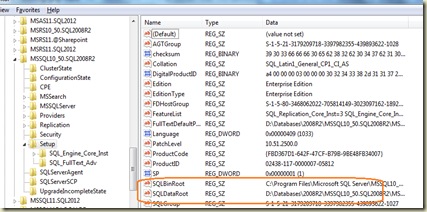

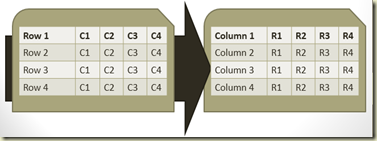

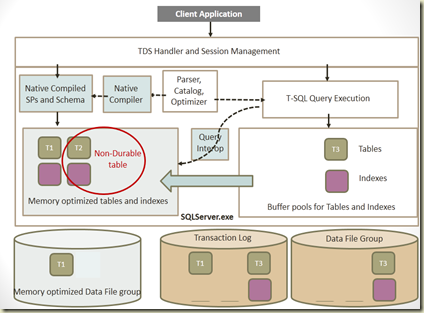

In-Memory OLTP is a database engine component which is optimized for accessing memory-resident tables. This was started 4 years ago with codename “Hekaton”. It is mainly for OLTP scenario and it significantly improves the performance of OLTP workloads. Here are some of key points on it;

-

Currently available with SQL Server 2014 - 64bit CTP1,

CTP2.

-

Tables can be created in memory as fully Durable or Non-Durable.

-

Content of durable tables will not be lost in case of server crash.

-

Non-durable tables are fully in memory. Content will be lost at server crash (including service restart).

-

Indexes created for in-memory tables are in memory only.

-

T-SQL can be written for accessing both in-memory tables and disk tables.

-

In-memory tables are fully supported for ACID.

-

Stored procedures written for accessing only in-memory tables can be made as natively compiled procedures.

Let’s see the code. I have already created a DB called TestDatabase01 and it has a table called dbo.FactSales. This table is from ContosoRetailDW which is available at: http://www.microsoft.com/en-us/download/details.aspx?displaylang=en&id=18279.

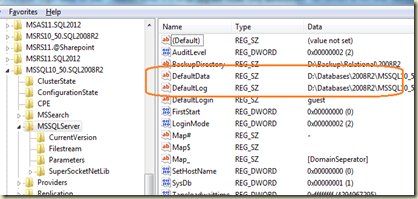

First step is enable the database for in-memory tables. It is done by adding a file group specifically for in-memory tables and add a file to it.

ALTER DATABASE [TestDatabase01] ADD FILEGROUP FG_MemoryOptiimizedData

CONTAINS MEMORY_OPTIMIZED_DATA

GO

ALTER DATABASE TestDatabase01 ADD FILE

(NAME = 'TestDatabase01_MemoryOptiimizedData'

, FILENAME = 'C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\TestDatabase01_MemoryOptiimizedData.ndf')

TO FILEGROUP FG_MemoryOptiimizedData

GO

Next step is creating tables. This code creates three tables as mentioned above.

-- creating a normal disk table

CREATE TABLE dbo.NewFactSales

(

SalesKey int NOT NULL PRIMARY KEY

, DateKey datetime NOT NULL

, channelKey int NOT NULL

, StoreKey int NOT NULL

, ProductKey int NOT NULL

, PromotionKey int NOT NULL

, CurrencyKey int NOT NULL

, UnitCost money NOT NULL

, UnitPrice money NOT NULL

, SalesQuantity int NOT NULL

, ReturnQuantity int NOT NULL

, ReturnAmount money NULL

, DiscountQuantity int NULL

, DiscountAmount money NULL

, TotalCost money NOT NULL

, SalesAmount money NOT NULL

, ETLLoadID int NULL

, LoadDate datetime NULL

, UpdateDate datetime NULL

)

GO

-- creating an in-memory table.

-- Option DURABILITY = SCHEMA_AND_DATA makes table durable

CREATE TABLE dbo.FactSales_MemoryOptimized_Durable

SalesKey int NOT NULL PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 3000000)

, DateKey datetime NOT NULL

, channelKey int NOT NULL

, StoreKey int NOT NULL

, ProductKey int NOT NULL

, PromotionKey int NOT NULL

, CurrencyKey int NOT NULL

, UnitCost money NOT NULL

, UnitPrice money NOT NULL

, SalesQuantity int NOT NULL

, ReturnQuantity int NOT NULL

, ReturnAmount money NULL

, DiscountQuantity int NULL

, DiscountAmount money NULL

, TotalCost money NOT NULL

, SalesAmount money NOT NULL

, ETLLoadID int NULL

, LoadDate datetime NULL

, UpdateDate datetime NULL

)

WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA)

GO

-- creating another in-memory table.

-- Option DURABILITY = SCHEMA_ONLY, this is non-durable

CREATE TABLE dbo.FactSales_MemoryOptimized_NonDurable

(

SalesKey int NOT NULL PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 3000000)

, DateKey datetime NOT NULL

, channelKey int NOT NULL

, StoreKey int NOT NULL

, ProductKey int NOT NULL

, PromotionKey int NOT NULL

, CurrencyKey int NOT NULL

, UnitCost money NOT NULL

, UnitPrice money NOT NULL

, SalesQuantity int NOT NULL

, ReturnQuantity int NOT NULL

, ReturnAmount money NULL

, DiscountQuantity int NULL

, DiscountAmount money NULL

, TotalCost money NOT NULL

, SalesAmount money NOT NULL

, ETLLoadID int NULL

, LoadDate datetime NULL

, UpdateDate datetime NULL

)

WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_ONLY)

GO

Let’s insert records. Insert three statements separately for seeing the difference.

-- Insert into disk table

INSERT INTO dbo.NewFactSales

(

SalesKey, DateKey, channelKey, StoreKey

, ProductKey, PromotionKey, CurrencyKey

, UnitCost, UnitPrice, SalesQuantity, ReturnQuantity

, ReturnAmount, DiscountQuantity, DiscountAmount

, TotalCost, SalesAmount, ETLLoadID, LoadDate, UpdateDate

)

SELECT

SalesKey, DateKey, channelKey, StoreKey

, ProductKey, PromotionKey, CurrencyKey

, UnitCost, UnitPrice, SalesQuantity, ReturnQuantity

, ReturnAmount, DiscountQuantity, DiscountAmount

, TotalCost, SalesAmount, ETLLoadID, LoadDate, UpdateDate

FROM dbo.FactSales

-- Insert into in-memory durable table

INSERT INTO dbo.FactSales_MemoryOptimized_Durable

(

SalesKey, DateKey, channelKey, StoreKey

, ProductKey, PromotionKey, CurrencyKey

, UnitCost, UnitPrice, SalesQuantity, ReturnQuantity

, ReturnAmount, DiscountQuantity, DiscountAmount

, TotalCost, SalesAmount, ETLLoadID, LoadDate, UpdateDate

)

SELECT

SalesKey, DateKey, channelKey, StoreKey

, ProductKey, PromotionKey, CurrencyKey

, UnitCost, UnitPrice, SalesQuantity, ReturnQuantity

, ReturnAmount, DiscountQuantity, DiscountAmount

, TotalCost, SalesAmount, ETLLoadID, LoadDate, UpdateDate

FROM dbo.FactSales

-- Insert into in-memory non-durable table

INSERT INTO dbo.FactSales_MemoryOptimized_NonDurable

(

SalesKey, DateKey, channelKey, StoreKey

, ProductKey, PromotionKey, CurrencyKey

, UnitCost, UnitPrice, SalesQuantity, ReturnQuantity

, ReturnAmount, DiscountQuantity, DiscountAmount

, TotalCost, SalesAmount, ETLLoadID, LoadDate, UpdateDate

)

SELECT

SalesKey, DateKey, channelKey, StoreKey

, ProductKey, PromotionKey, CurrencyKey

, UnitCost, UnitPrice, SalesQuantity, ReturnQuantity

, ReturnAmount, DiscountQuantity, DiscountAmount

, TotalCost, SalesAmount, ETLLoadID, LoadDate, UpdateDate

FROM dbo.FactSales

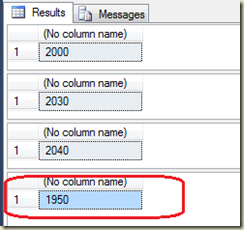

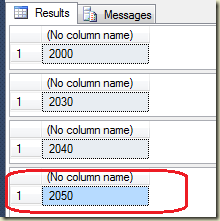

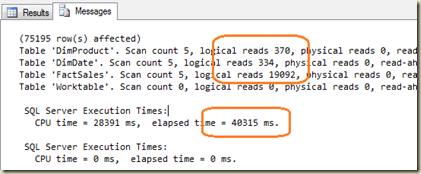

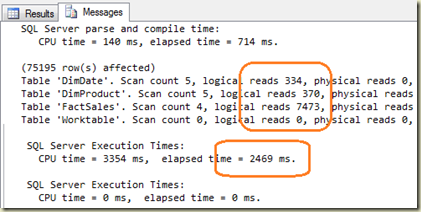

My VM is configured with 5.5GB memory. Three INSERT statements took 00:01:46, 00:00:17, and 00:00:09 respectively. As you see clearly, in-memory optimized tables give better performance than disk based tables. Let’s run the same with stored procedures. Below code adds three stored procedures. Note the second and third. Few options have been added to them, making them as natively compiled procedures. In addition to that, isolation level and language options are added too. Currently they are required for these procedures.

Note that current version requires manual statistics updates for in-memory tables.

UPDATE STATISTICS dbo.FactSales_MemoryOptimized_Durable WITH FULLSCAN, NORECOMPUTE

UPDATE STATISTICS dbo.FactSales_MemoryOptimized_NonDurable WITH FULLSCAN, NORECOMPUTE

Now let’s add procedures and run, and see the time each takes.

-- Procedure inserts records to disk table

CREATE PROCEDURE dbo.Insert_FactSales

AS

BEGIN

DECLARE @a int = 4000000

WHILE (@a < 7000000)

BEGIN

INSERT INTO dbo.NewFactSales

(SalesKey,DateKey,channelKey,StoreKey,ProductKey,PromotionKey,CurrencyKey,UnitCost

,UnitPrice,SalesQuantity,ReturnQuantity,ReturnAmount,DiscountQuantity,DiscountAmount

,TotalCost,SalesAmount,ETLLoadID,LoadDate,UpdateDate)

VALUES

(@a,'2013-01-01',1,1,1,1,1,1

,1,1,1,1,1,1

,1,1,1,'2013-01-01','2013-01-01')

SET @a = @a + 1

END

END

GO

-- Procedure inserts records to in-memory durable table

CREATE PROCEDURE dbo.Insert_FactSales_MemoryOptimized_Durable

WITH NATIVE_COMPILATION, SCHEMABINDING, EXECUTE AS OWNER

AS

BEGIN ATOMIC

WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_English')

DECLARE @a int = 4000000

WHILE (@a < 7000000)

BEGIN

INSERT INTO dbo.FactSales_MemoryOptimized_Durable

(SalesKey,DateKey,channelKey,StoreKey,ProductKey,PromotionKey,CurrencyKey,UnitCost

,UnitPrice,SalesQuantity,ReturnQuantity,ReturnAmount,DiscountQuantity,DiscountAmount

,TotalCost,SalesAmount,ETLLoadID,LoadDate,UpdateDate)

VALUES

(@a,'2013-01-01',1,1,1,1,1,1

,1,1,1,1,1,1

,1,1,1,'2013-01-01','2013-01-01')

SET @a = @a + 1

END

END

GO

-- Procedure inserts records to in-memory non-durable table

CREATE PROCEDURE dbo.Insert_FactSales_MemoryOptimized_NonDurable

WITH NATIVE_COMPILATION, SCHEMABINDING, EXECUTE AS OWNER

AS

BEGIN ATOMIC

WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_English')

DECLARE @a int = 4000000

WHILE (@a < 7000000)

BEGIN

INSERT INTO dbo.FactSales_MemoryOptimized_NonDurable

(SalesKey,DateKey,channelKey,StoreKey,ProductKey,PromotionKey,CurrencyKey,UnitCost

,UnitPrice,SalesQuantity,ReturnQuantity,ReturnAmount,DiscountQuantity,DiscountAmount

,TotalCost,SalesAmount,ETLLoadID,LoadDate,UpdateDate)

VALUES

(@a,'2013-01-01',1,1,1,1,1,1

,1,1,1,1,1,1

,1,1,1,'2013-01-01','2013-01-01')

SET @a = @a + 1

END

END

GO

EXEC dbo.Insert_FactSales

EXEC dbo.Insert_FactSales_MemoryOptimized_Durable

EXEC dbo.Insert_FactSales_MemoryOptimized_NonDurable

Again, natively compiled procedures against give better performance than others. Here is the summary;

| Insert 3 million records |

Disk table |

In-Memory table – Durable |

In-Memory table - NonDurable |

| INSERT statement |

00:01:46 |

00:00:17 |

00:00:09 |

| SP |

00:15:19 |

00:00:19

(Natively Compiled) |

00:00:03

(Natively Compiled) |

Expect more codes on this with future posts. Code related to this can be downloaded from: http://sdrv.ms/18sAxEa

The presentation used for the session can be downloaded from: http://sdrv.ms/1d2xSoe

SQL 2014 is still being developed. However, for testing purposes, you can download CTP 2 from here: http://technet.microsoft.com/en-us/evalcenter/dn205290.aspx

Here are useful links on this;