It is very rare to see a table with Heap Structure as it is not a common practice but we still find such tables with some implementations. When performing performance tuning on problematic tables or queries, the first check we have in the checklist is whether the query produces an odd number of IOs than it should show, hence, same is applied to a query written against a heap structure as well. What if it shows a significant higher number for IOs?

This can happen because of Forwarding Pointers. When a value of a column is updated that requires more space and if the Data Page has no space, the page is set with a new page or last page it finds and keeps a pointer in the original page. SQL Server does this because by doing like this, it does not need to update the RID (8byte – file number: page number: row number) of non-clustered indexes created. But if the table has many records and many similar updates, it creates many forwarding pointers and increases the IO for satisfying the request.

Let's check this with a simple code;

The following code creates a table and inserts set of records;

USE tempdb;

GO

CREATE TABLE dbo.TestTable

(

Id int identity(1,1)

, Name varchar(200) not null

);

GO

INSERT INTO dbo.TestTable

(Name) VALUES ('Name');

GO 1000000

This code checks the space. As you see, the table consumes 21800KB and if we divide it by 8, we can get the approximate number of pages it needs to hold records, which is 2725. The last statement shows the pages read.

-- This shows the space used for data is 21800 KB EXEC sp_spaceused N'dbo.TestTable', N'true'; -- If we devide by 8, it should show the approximate number of pages -- table should used for maintaining records. -- 21800 / 8 = 2725 -- And this proves that our calculation is correct SET STATISTICS IO ON SELECT COUNT(*) FROM dbo.TestTable; SET STATISTICS IO OFF

Now let's update the table by increasing the size of the second column. Since all pages are full with initial inserts, SQL Server moves updated records to new pages, adding pointers to the original location. If we check the same after the update, you will see that our calculation shows that it needs only 16630 pages but there are 489,382 page reads.

UPDATE dbo.TestTable

SET Name = REPLICATE('N', 200)

WHERE Id % 2 = 0;

-- This shows the space used for data is 133040 KB

EXEC sp_spaceused N'dbo.TestTable', N'true';

-- If we devide by 8, it should show the approximate number of pages

-- table should used for maintaining records.

-- 133040 / 8 = 16630

-- But we do not see the same now

SET STATISTICS IO ON

SELECT COUNT(*) FROM dbo.TestTable;

SET STATISTICS IO OFF

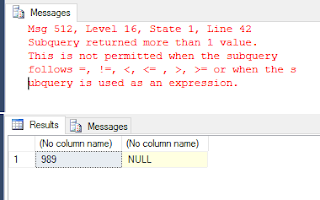

This will surely slow down the queries. How this has happened? It has happened because of forwarding pointers set. The following code shows you the number of forwarding pointers created by our update statements.

Have a look on the output, the 489,382 IOs is formed as Page Count + Forwarded Record Count which is 16630 + 472,752.

If you see a similar issue with your table, it is better to upgrade the table from Heap to Clustered structure. However, as a temporary measure, you can run the following code for fixing it.

ALTER TABLE dbo.TestTable REBUILD;