WIth my previous post (How to create a DocumentDB in Azure), I mentioned that there are multiple ways of loading documents into DocumentDB in Azure. This is the continuation of it, let's see how we can load data from SQL Server into DocumentDB using Data Migration Tool. Data Migration Tool is a free open-source tool that can be used for loading data into DocumentDB from many different sources. It converts loaded data into JSON hierarchical documents and supports loading data from Microsoft SQL Server, MongoDB, JSON, CSV, Azure Table Storage and many more.

The Data Migration Tool can be downloaded from Microsoft Download Center. However there is an easy way, link for the page is available with DocumentDB account blade;

Go to the page and download the compressed file. Once files are extracted, you will see two executable files; dt.exe and dtui.exe.

Click the dtui.exe for opening the tool. As usual, welcome screen appears, and next screen for selecting the source. Since we try to load data from SQL Server, select SQL under Import from. You need to manually add the connection string (you can get it generated by creating a UDL file), make sure it is added without the provider.

Let's try to load data from AdventureWorksDW2014 database. This is the query for loading customers with locations.

USE AdventureWorksDW2014; GO SELECT c.CustomerKey AS [key] , c.FirstName + ' ' + c.LastName AS [Name] , c.AddressLine1 AS [Location.Street] , g.City AS [Location.City] , g.StateProvinceName AS [Location.Province] FROM dbo.DimCustomer c INNER JOIN dbo.DimGeography g ON c.GeographyKey = g.GeographyKey;

Examine the query. Note the way how aliases have been set, specifically columns related to the address. If you need a hierarchy inside the document, this is the way of instructing the tool for making them. As per this query, a document will contain properties like key, name, and location, and location will have three more properties; Street, City and Province.

Once the query is placed, write dot (".") for Nesting Separator for instructing the tool that dot is used for separating nested properties.

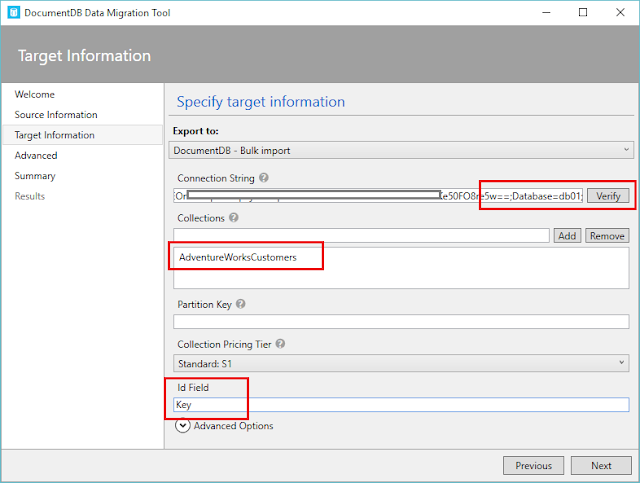

Export to

Next screen for setting the target. There are multiple options for Export to, since this loads only 18484 documents, we can use DocumentDB - Bulk Import rather selecting other items such as DocumentDB - Sequential record import and JSON file. This creates a stored procedure in the collection and uses for loading documents. It is recommended to use second option which is DocumentDB - Sequential record import for large set of documents because it is faster than bulk load. The third option JSON file does not load documents to DocumentDB but creates JSON files.

Connection string

Setting the connection string is easy. All we have to do is, get the copy of primary connection string from keys in documentdb account blade and add the database name to it. Note that database name has to be manually added.

Collection

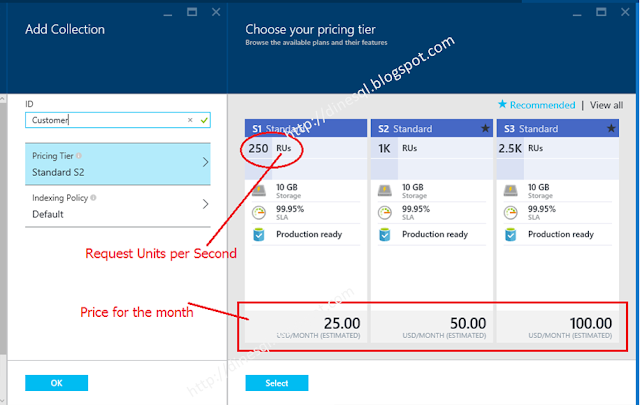

We can load documents to an existing collection or create a new one. If the one we added with the screen is not exist, tool will create a one for us with the selected performance tier.

Id field

If you leave the Id input box blank, it will create one for us, else we can instruct to use one of our columns for that. As you see, I instruct to use Key column for Id of the documents.

Indexing policy

We can add a policy that explains how we search documents and what type of index we prefer. We do not need to write it but can select the required index by right-clicking on it for getting the menu and selecting one. There are three options;

- Default - Efficient indexing only for numeric supporting equality and range check and sorting. String searches cause scan and no support on sorting.

- Hash - Support only scanning for both numeric and string, and no support on sorting.

- Range - Efficient indexing for both numeric and string including sorting but may use extra space and resources.

Now it is ready for loading, go through the summary and complete it.