USE tempdb CREATE TABLE dbo.Product ( ProductId int IDENTITY(1,1) NOT NULL, ProductName varchar(200) NOT NULL, Class char(1) NOT NULL, Cost money NOT NULL, PRIMARY KEY CLUSTERED (ProductId ASC) ) GO ALTER TABLE dbo.Product ADD CONSTRAINT CH_Product_Class CHECK ((Class='A' OR Class='a' OR Class='B' OR Class='b')) GO ALTER TABLE dbo.Product ADD CONSTRAINT CH_Product_Cost CHECK ((Cost>(0))) GO

Friday, November 25, 2016

SSIS OLE DB Destination sends all records to Error Output

Saturday, August 15, 2015

Finding user tasks that are waiting longer than expected time

/* Execute this in a new window Note that it updates a record within an explicit transaction */ USE AdventureWorks2014; GO BEGIN TRAN UPDATE Production.Product SET Color = 'Black' WHERE ProductID = 1; -- ROLLBACK TRAN /* Execute this in a new window This tries to retrieve the same */ USE AdventureWorks2014; GO SELECT * FROM Production.Product WHERE ProductID = 1; /* Execute this in a new window This looks for queries waiting longer than 20 seconds */ SELECT s.original_login_name , t.wait_type , t.wait_duration_ms, q.text FROM sys.dm_os_waiting_tasks AS t INNER JOIN sys.dm_exec_sessions AS s ON t.session_id = s.session_id INNER JOIN sys.dm_exec_requests r ON s.session_id = r.session_id CROSS APPLY sys.dm_exec_sql_text(r.sql_handle) q WHERE s.is_user_process = 1 AND t.wait_duration_ms > 20000; -- waiting more than 20 seconds

Saturday, October 5, 2013

What is Columnstore Index - SS SLUG Aug 2013 – Demo V

If you have worked with large databases (or data warehouses), you have already seen enough of scenarios where you do not get much benefits out of indexes, particularly with large data tables. SQL Server 2012 ColumnStore index structure was specifically introduced for addressing this issue.

This new structure is based on Microsoft Vertipaq technology and it goes as a non-clustered index. The different between this and traditional indexing is the storage. The indexes we have worked so far store data in a row-wise structure where as this stores data in a column-wise structure. Another different which make this unusable with OLTP databases is, structure becomes Read-Only.

How this exactly stores data was discussed in the user group meeting. Here are set of images that explains how SQL Server organizes data for ColumnStore indexes.

The first image shows a table that contains millions of records with hundreds of columns. If you execute a command for creating a ColumnStore non-clustered index, the first step of SQL Server is grouping records (image 2). Then it creates segments for each column in each group. Third image shows how 16 segments are created. SQL Server uses Dictionary Compression for compressing these segments. These segments will be stored in LOBs and become unit of transfer between the disk and the memory.

Let’s see how it can be used and the performance benefit. This sample code uses ContosoRetailDW and it can be downloaded at: http://www.microsoft.com/en-us/download/details.aspx?displaylang=en&id=18279.

Have a look on following query. The query accesses three tables (one is very large) and performs set of aggregations.

USE [ContosoRetailDW]

GO

SET STATISTICS IO ON

SET STATISTICS TIME ON

SELECT

p.ProductName

, d.CalendarYear

, d. CalendarMonth

, COUNT(*) TotalOrders

, SUM(f.SalesQuantity) Quantity

, SUM(SalesAmount) Amount

, SUM(CASE WHEN f.DateKey = '2009-12-31 00:00:00.000' THEN SalesAmount ELSE 0 END) AmountToday

FROM dbo.FactSales f

INNER JOIN dbo.DimDate d

ON d.Datekey = f.DateKey

INNER JOIN dbo.DimProduct p

ON p.ProductKey = f.ProductKey

GROUP BY

p.ProductName

, d.CalendarYear

, d. CalendarMonth

ORDER BY 1, 2, 3

SET STATISTICS IO OFF

SET STATISTICS TIME OFF

It has read around 19,000 pages and has taken 40 seconds.

Now, let’s create a ColumnStore index on the table. The recommended way is, include all columns when creating the index.

CREATE COLUMNSTORE INDEX IX_FactSales_CStore ON dbo.FactSales (

SalesKey, DateKey, channelKey, StoreKey, ProductKey, PromotionKey, CurrencyKey

, UnitCost, UnitPrice, SalesQuantity, ReturnQuantity, ReturnAmount, DiscountQuantity

, DiscountAmount, TotalCost, SalesAmount,ETLLoadID, LoadDate, UpdateDate)

This might takes minutes based on the number of records in the table. Once it is created, run the SELECT again and see the result.

As you see, number of pages that have been read is around 8,000 and time taken is 2 seconds. It is a significant improvement. However the biggest issue with this is, now table has become a read-only table. However this can be applied in partition level and partitions that contain history data can be benefited from this.

This link: http://technet.microsoft.com/en-us/library/gg492088.aspx gives all info regarding ColumnStore indexes. Have a look on it for understanding restrictions, limitations and scenarios you can use it.

Sunday, September 29, 2013

Understanding Indexed Views - SS SLUG Aug 2013 – Demo IV

Here is another post on a demo did at Aug meet-up. This post speaks about Indexed Views.

As the name says, views can be made as Indexed Views by adding indexes on them. If you are not familiar with this concept, a general question comes up; Views are not physical objects, SQL Server holds the definitions of them only, then how indexes are maintained with views? Here is the answer if you have the same questions; Once a clustered index is added to a view, it becomes a physical object, it becomes something similar to a table, it becomes an Indexed View.

What is the point of adding an index to a view? Can we improve the performance of a normal view that contains simple, single-table query? No, we cannot. This is not for such queries. This is typically for views that are formed with multiple tables and contains aggregations. Assume that you have a view that joins 2-3 tables with millions of records and then performs set of aggregations. Aggregations reduce number of records returned but it still needs to read all records, increasing IO operations. If all three tables are maintained with 30,000 pages, whenever the view is accessed, all 30,000 pages have to be read and then aggregations should be done. Making this view as an Indexed view, number of pages to be read is eliminated and aggregation is not required to be performed. This definitely improves the performance.

Here is the demo code shown with the presentation on it.

USE AdventureWorks

GO

-- create a view from Sales header and details

CREATE VIEW dbo.GetSales

AS

SELECT h.SalesOrderID, h.CustomerID, SUM(d.LineTotal) LineTotal

FROM Sales.SalesOrderHeader h

INNER JOIN Sales.SalesOrderDetail d

ON h.SalesOrderID = d.SalesOrderID

GROUP BY h.SalesOrderID, h.CustomerID

GO

-- View data from GetSales view

-- Note the number pages reading during data retrieval

SET STATISTICS IO ON

SELECT * FROM dbo.GetSales

SET STATISTICS IO OFF

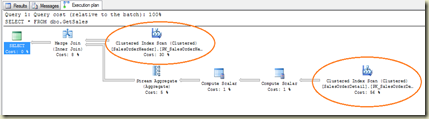

Look at the output and the plan;

Now let’s make the view as an Indexed view. There are many rules to be followed, key rules are listed below;

-

All columns in the view must be deterministic.

-

The SCHEMA_BINDING view option must be should when creating.

-

The clustered index must be created as unique.

-

Tables references in the view must be two-part naming.

-

The COUNT_BIG() function must be included if aggregation is used.

-

Some aggregations, such as AVG, MAX, MIN are disallowed in indexed views.

-- Making the view as INDEXED VIEW

ALTER VIEW dbo.GetSales

WITH SCHEMABINDING

AS

SELECT h.SalesOrderID

, h.CustomerID

, SUM(d.LineTotal) LineTotal

, COUNT_BIG(*) CountBig -- this is required

FROM Sales.SalesOrderHeader h

INNER JOIN Sales.SalesOrderDetail d

ON h.SalesOrderID = d.SalesOrderID

GROUP BY h.SalesOrderID, h.CustomerID

GO

-- This makes the view as INDEXED VIEW

CREATE UNIQUE CLUSTERED INDEX IX_GetSales ON dbo.GetSales (SalesOrderID, CustomerID)

GO

-- Get data from view and see, note the number of data pages read

-- This shows that view is now physically available and data is loaded from it

SET STATISTICS IO ON

SELECT SalesOrderID, CustomerID, LineTotal FROM dbo.GetSales

SET STATISTICS IO OFF

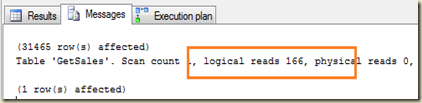

Note the pages accessed and the plan;

As you see, performance is significantly improved. However there is something needs to be considered when creating Indexed Views. That is the cost for maintaining it. Every changes make to underline tables require an update on the view. It is transparent but a cost is involved. Therefore implement this for tables that are not frequently updated.

Note that if you are using Standard Edition, you need to use NOEXPAND hint with SELECT statement like below. Otherwise SQL Server will not use the added index.

SELECT SalesOrderID, CustomerID, LineTotal FROM dbo.GetSales WITH (NOEXPAND )

Sunday, May 1, 2011

Object Name Resolution – SQL Server

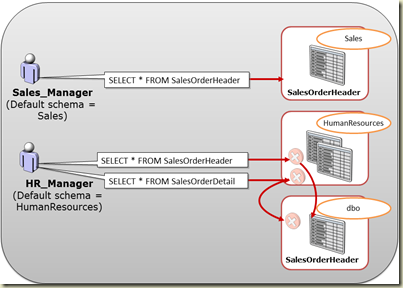

Understanding how SQL Server resolves objects’ names will be an effective remedy against performance issues. This name resolution happens if securables are not fully qualified. A statement like SELECT SalesOrderID FROM SalesOrderHeader will be a candidate for this. As you see, the SalesOrderHeader is not written as a fully qualified name, hence SQL Server tries to resolve it by adding Schema owned by user connected. If SQL Server cannot find an object like ConnectedUser’sSchema.SalesOrderHeader then it tries to find an object called dbo.SalesOrderHeader as the second step. Error is thrown, if it is not successful too. Here is an example for it;

USE AdventureWorks2008

GO

-- creating a new table with dbo schema

SELECT TOP (1) *

INTO SalesOrderHeader

FROM Sales.SalesOrderHeader

-- Create two logins for testing

CREATE LOGIN HR_Manager WITH PASSWORD = '123'

, CHECK_POLICY = OFF;

CREATE LOGIN Sales_Manager WITH PASSWORD = '123'

, CHECK_POLICY = OFF;

GO

-- Adding them as users with default schemas

CREATE USER HR_Manager WITH DEFAULT_SCHEMA = HumanResources

CREATE USER Sales_Manager WITH DEFAULT_SCHEMA = Sales

GO

-- Given users permission on data reading

sp_addrolemember 'db_datareader', 'HR_Manager';

sp_addrolemember 'db_datareader', 'Sales_Manager';

-- Execute as HT_Manager and see

EXECUTE AS USER = 'HR_Manager'

GO

-- This shows records from newly created

-- table, not from original table

SELECT * FROM SalesOrderHeader

-- This throws an error

SELECT * FROM SalesOrderDetail

REVERT

GO

-- Execute as Sales_Manager

EXECUTE AS USER = 'Sales_Manager'

GO

-- Both statements will work

SELECT * FROM SalesOrderHeader

SELECT * FROM SalesOrderDetail

REVERT

GO

As you see with the code, when HR_Manager executes SELECT statements, as the first step, names of them will be resolved as HumanResources.SalesOrderHeader and HumanResources.SalesOrderDetail. SQL Server does not find any objects and do the next step, resulting dbo.SalesOrderHeader and dbo.SalesOrderDetail. A match will be found for first one as we have created one with dbo schema but not for the second. In that case, second statement throws an error.

When Sales_Manager executes, SQL Server resolves objects’ names as Sales.SalesOrderHeader and Sales.SalesOrderDetail. In that case, both statements are successfully executed.

Even though Sales_Manager executes statements without any error, it would be always better to make securables as fully qualified objects, that helps SQL Server to quickly execute the code without performing an additional task.

Tuesday, August 24, 2010

Do you consider the “CONVERT_IMPLICIT”?

This is not just about implicit conversion. This is about implicit conversion that happens without our notice, that might hinder the performance. See the code below:

USE AdventureWorks2008

GO

DECLARE @FirstName varchar(50)

SET @FirstName = 'Gigi'

SELECT * FROM Person.Person

WHERE FirstName = @FirstName

No major issue. It returns data as we want. Enable Actual Execution Plan and run the code again. Do you see what I see?

SQL Server converts the value which is varchar into nvarchar which can be a costly operation in a certain situation. SQL Server does this because char and varchar have lower precedence than nchar and nvarchar.

This implicit can be simply overcome by making the variable as nvarchar. You can see the same when integer value is passed to a string type column that contains integer values.

Although this is a simple and not a major issue as we see, it can slow down your queries.