This is based on the presentation titled “SQL Brain Bashers” that I gave at the SQL Server Sri Lanka User Group meeting. It discusses how SQL Server converts two-digit years to four-digit years.

Here is the question related to this demo:

You execute the following query. Note that the years in the input values are passed as two-digit years.

DECLARE @datetime datetime

SET @datetime = '01/01/00'

SELECT YEAR (@datetime)

SET @datetime = '01/01/30'

SELECT YEAR (@datetime)

SET @datetime = '01/01/40'

SELECT YEAR (@datetime)

SET @datetime = '01/01/50'

SELECT YEAR (@datetime)

How does SQL Server interpret each of these two-digit years as a four-digit year?

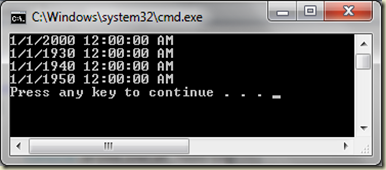

To answer this question, you need to know the two digit year cutoff that SQL Server uses to determine the century. The default value of two digit year cutoff is 2049. If you pass a two-digit year that is less than or equal to the last two digits of the cutoff year (49), SQL Server uses the same century as the cutoff year; otherwise, it uses the previous century. Here is the result of the above query:

Since 50 is above the last two digits of the cutoff year, it is interpreted as 1950. All other two-digit years take the century of the cutoff year, giving 2000, 2030, and 2040.
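The rule above can be sketched in T-SQL without touching any server setting. This is only an illustration: the variable `@cutoff` and the sample list of two-digit years are assumptions made for the example, and the syntax assumes SQL Server 2008 or later.

```sql
-- Sketch: emulate the two digit year cutoff rule with plain arithmetic.
-- @cutoff is an illustrative variable, not the server option itself.
DECLARE @cutoff int = 2049;

SELECT v.yy AS two_digit_year,
       CASE
           WHEN v.yy <= @cutoff % 100
               THEN (@cutoff / 100) * 100 + v.yy      -- same century as the cutoff year
           ELSE (@cutoff / 100 - 1) * 100 + v.yy      -- century before the cutoff year
       END AS four_digit_year
FROM (VALUES (0), (30), (40), (49), (50), (99)) AS v(yy);
```

With `@cutoff` set to 2049, 49 maps to 2049 and 50 maps to 1950. Changing `@cutoff` to 2060 moves the boundary so that 50 maps to 2050 and 61 maps to 1961.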

You can change the cutoff year if required. This option appears only if “show advanced options” is enabled via sp_configure, so enable it first if it is not. Then set the value you need for “two digit year cutoff”. The code below sets it to 2060.

-- enable advanced options

EXEC sp_configure 'show advanced options', 1

GO

RECONFIGURE

GO

-- set two digit year cutoff as 2060

EXEC sp_configure 'two digit year cutoff', 2060

GO

RECONFIGURE

GO
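To confirm that the change took effect, one option is to read the setting from the `sys.configurations` catalog view. This is a minimal sketch; the choice of columns is only for illustration.

```sql
-- Show the configured value and the value currently in use.
-- The two differ if RECONFIGURE has not been run after sp_configure.
SELECT name, value, value_in_use, minimum, maximum
FROM sys.configurations
WHERE name = 'two digit year cutoff';
```

If `value` and `value_in_use` differ, the new cutoff has been stored but is not yet active.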

Let’s run the first query again and see the result:

As you can see, 50 is now less than the last two digits of the current cutoff year (60). Therefore, even the last value is interpreted as 2050. Change the setting back to 2049 to maintain backward compatibility.
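Restoring the default could look like the following. This is a sketch that assumes “show advanced options” is still enabled from the earlier step.

```sql
-- Restore the default two digit year cutoff (2049).
EXEC sp_configure 'two digit year cutoff', 2049;
GO
RECONFIGURE;
GO
```

If advanced options were enabled only for this demo, they can be turned off again the same way by setting 'show advanced options' to 0 and running RECONFIGURE.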

You need to know one more thing about this setting. If you accept year values via an OLE Automation object, be aware that its cutoff year is 2030, not 2049. For example, if a .NET application sends values to a stored procedure and the values are supplied as two-digit years, the client converts them using its own cutoff before they reach SQL Server, so you may see unexpected years. Look at the code below. If the two-digit value is less than or equal to the last two digits of the cutoff year, the century of the cutoff year is used.

DateTime dt;

dt = Convert.ToDateTime("01-01-00");

Console.WriteLine(dt.ToString());

dt = Convert.ToDateTime("01-01-30");

Console.WriteLine(dt.ToString());

dt = Convert.ToDateTime("01-01-40");

Console.WriteLine(dt.ToString());

dt = Convert.ToDateTime("01-01-50");

Console.WriteLine(dt.ToString());
