Wednesday, October 28, 2009

Native OLE DB\SQL Server Native Client 10.0 does not list out the available databases

This held our work for few hours. When we tried to make a connection to SQL Server 2008 server with “Native OLE DB\SQL Server Native Client 10.0” provider from a developer’s machine through SSIS, it did not list out available databases, and did not allow to connect to a database even the name of the database is just typed in the “Select or enter a database name” input box. We doubt that the problem was related to some network problem or to some installed components but, since it allowed to connect with “Native OLE DB\Microsoft OLE DB Provider for SQL Server”, thought that it is related to “SQL Server Native Client 10.0”.

We spent hours to find the reason but could not find any issue related to SQL Server components. Though the connection can be established with “Microsoft OLE DB Provider for SQL Server”, since it does not allow to run some of the new TSQLs and specially it does not recognize “Date” data type properly, we had to dig deeper….

Finally, my colleague Buddhika found the issue. Windows Firewall Settings was blocking the Native Client 10.0 requests. Once it is turned off, connection could be established. Again, turning off the settings is not a good practice, we tried to find a way to make a connection while Firewall Settings is on, and we found. Simply, the TCP port 1433 added as an exception for the Firewall Settings. It worked.

So, in shorter form, if you cannot make a connection to SQL Server 2008 with Native OLE DB\SQL Server Native Client 10.0” provider, make sure that the SQL Server listening port is added as an exception with Windows Firewall Settings.

I am sure that we will be getting the same with Analysis Services too, and adding TCP port 2383 as an exception might solve the issue.

Tuesday, October 27, 2009

Slowly Changing Dimension: Char for Type II Attributes

I used to use Char data type for character data types of variable length columns, if the maximum number of characters that will set for the attribute is less than 10. This is what most DBAs/DBEs do. Not only the maximum length, there are few more considerations for using Char instead of Varchar for character data types of variable length. One would be, when the length of all data values is approximately same.

I made a mistake; this cannot be applied in everywhere, especially in Relational DW with Type II attributes. For example, if I have to make a dimension table with Marital Status that contains either Single or Married, I can make the column as either varchar(8) or char(8), so I made it as char(8) because I prefer to make my design according to experts suggestions. Unfortunately this is a Type II attribute which is designed to maintain history, hence when the record contains “Single”, even though the same value comes with a new data set, it considers as a changed (because of the length) and inserts a new record, making the old record as a historical record and it continues with next data set...

It unnecessary adds new records to the table making the old ones as historical records.

So, I made the all the Type II columns as varchar columns that were set as char to solve the issue, what do you think, any suggestions, any thoughts on this?

Thursday, October 22, 2009

Another SQL Server new course.

Last week, two batches, one for 2780B (SQL 2005) and another for 6231A (SQL 2008) were successfully completed and will be starting my next class, most probably within next two weeks, for 2778: Writing Queries with Microsoft SQL Server 2008, at NetAssist. If you interest to join my class, you are welcome.

Although I started my conducting classes on.NET, last two years I have been doing classes, workshops only for SQL Server and Business Intelligence. Just thought to focus on two more subjects. Working closely with one of the reputed institutes regarding these two subjects, hope will be able to start them by January, 2010.

USER_NAME(), SUSER_NAME(), ORIGINAL_LOGIN()

Sometimes, we switch the execution context to different account when required. One of requirements when connected in such a manner may be, finding out the original account. This can be retrieved from ORIGINAL_LOGIN function. Not only this, the other functions such as USER_NAME, SUSER_NAME are useful too, if you need to info return from them.

Thought to put down a small code, just to show the different between these functions; Login as "sa" and execute...

-- Create a login and a user

USE master

GO

CREATE LOGIN TestLogin WITH PASSWORD = '123', CHECK_POLICY = OFF

GO

USE AdventureWorks

GO

CREATE USER TestUser FROM LOGIN TestLogin

-- Test the functions

-- Returns dbo

SELECT USER_NAME()

-- Returns sa

SELECT SUSER_NAME()

-- Switch the execution context

EXECUTE AS LOGIN = 'TestLogin'

-- Returns TestUser

SELECT USER_NAME()

-- Returns TestLogin

SELECT SUSER_NAME()

-- Returns sa

SELECT ORIGINAL_LOGIN()

REVERT;

USER_NAME: Returns the current user in the current context. If the user_id is submitted, returns the name of the given id.

SUSER_NAME: Returns the current login in the current context. If server_user_id is submitted, returns the name of given id.

ORIGINAL_LOGIN: Returns the original login in the session in which there are many implicit or explicit context switches.

Tuesday, August 25, 2009

New class on 2780B

I started another class for course 2780B - Maintaining a Microsoft SQL Server 2005 database. Though I wanted to do this on 2008 (6231A), this has been selected by the institute for participants because most of them are using 2005. In a way, it is good, because, doing another class on 2008 (6231A).

Doing three classes per week is bit difficult, but I like it, getting more invitations, planing to have more classes.... this might stop me, attaching permanent to a company.

Monday, August 17, 2009

Microsoft XPS Document Writer cannot be found

I am experiencing this error with Microsoft PowerPoint 2007, when the hibernated machine which had PowerPoint presentation opened, is started. Searched for a solution but no luck so far, and many face this it seems.

Anyone know the solution?

Sunday, August 16, 2009

Are you using "Windows Services window" for changing the SQL Server Service Account?

If you are using the "Windows Services" window for changing the assigned account for SQL Server service, you may face some problems later. When required, we should use SQL Server Configuration Manager, instead Windows Services because the Configuration Manager performs required operations such as configuring SQL Server related configurations, re-generating Service Master Key and decrypts and encrypts all objects that had been encrypted by Service Master Key. If you do not use Configuration Manager, you need to execute ALTER SERVICE MASTER KEY statement manually for completing the operation.

Monday, August 10, 2009

PerformancePoint error: Unable to connect to Server - Part II

Once I blogged about this error with this link. Today I faced the same, but the reason was different.

My new machine is installed with Vista-Ultimate 64-bit. I started installing all the prerequtities for PerformancePoint, did everything. Once installed, tried to connect to http://localhost:40000/WebService/PmService.asmx through Dashboard Designer (Options box), but no luck. It gave an error saying "You have not admin privileges.".

I had logged in as "Dinesh" and the account "Dinesh" is in Administrators group. I made sure again, that "Dinesh" is in Administrators group and "Dinesh" has permissions for the PerformancePoint web service too. I still do not know, why "Dinesh" could not make the connection..... he is in the Administrator group.

I solved the problem by logging to the system as an Administrator. Once logged in, I was able to connect and then I used the "Permission" box for adding "Dinesh" as an Admin to the PerformancePoint. When I log in with "Dinesh" now, it allows to make the connection now.

I think, by default, Administrator is added as the Admin of the PerformancePoint, so, he is the only person who can give permission to others. Keep this in mind, if you use some other account without using Administrator.

Saturday, August 8, 2009

Connecting to Analysis Services with the Port Number

First of all, let me tell you that why I need to connect to Analysis Services with the Port number; because the Analysis Services 2008 is running as a named instance and SQL Server Brower needs to be disabled as a security measure. Why do we need SQL Server Browser Services?

By default, Analysis Services uses the port 2383. When you connect to the default instance (in my case, it is 2005), it connects to the SSAS without any problems, even though you have not specified the port with the connection string. If you try to connect to a named instance of Analysis Services (again, in my case, it is 2008), without specifying the port, you will still be able to connect, as long as the SQL Server Browser is running. Named instances of the SSAS use port numbers that are dynamically assigned, therefore they are varies. When you restart the service, it assigns a new one. Assigned port number can be seen with msmdredir.ini file in the C:\Program Files (x86)\Microsoft SQL Server\90\Shared\ASConfig folder. Though we do not specify the port in the connection string (even with SSMS), SQL Server Browser handles the request and directs us to the correct instance, because it knows the port numbers assigned.

Now my issue was, unavailability of SQL Server Browser hence cannot connect to the named instance. When we try to connect to the named instance with SSMS or BIDS, it says that Browser is not running. Since the Browser cannot be enabled (as a security measure), all we have to do is, find the port number (from the ini file specified above) and specify with the connection string. One thing you have to remember is, when specifying, it should be {MachineName}:{PortNumber}, NOT like {InstanceName}:{PortNumber}. If my machine name is DINESH-PC and named instance is SQL2008;

When connecting to the default instance: DINESH-PC:2383

When connecting to the named instance: DINESH-PC:50019, NOT DINESH-PC\SQL2008:50019

If need to fix a port number, use the ini file or Port property in Analysis Services, for changing.

Wednesday, August 5, 2009

Foreign Keys with TinyInt do not recongnize by SSAS

This is still there, with SQL Server 2008 too. I usually use TinyInt for tables' primary key when the number of records to be held are less than hundred, so applied the same rule for my newest Data Warehousing project, completely forgetting the fact that Analysis Services cannot recognize the relationships between Fact and Dimensions that have TinyInt as the primary key.

As usual, had to change all my Dimensions tables, changing primary keys to Smallint. Remember this, if you are designing a data warehouse.

Restoring one File Group

Today, I came across a situation where I had to explain the FILEGROUP restore of SQL Server. Since I have not done this for a long time, I brushed my knowledge with Preethi's help and thought to blog it too.

If you have a database that has more than one file group, you may face to a situation where you need to restore only one file group, without restoring the entire database, saving time. One thing we have to remember is, secondary file group (or file) restore needs Primary file group restore. If your Primary file group contains a lot of tables, again, it takes time for restore operation (Usually, database that contains secondary file groups does not use primary for user-defined tables). So, make sure that you do not use Primary file group for your tables.

Here is an example: Assume that we have a database that has three file group: Primary, FG1, and FG2. In order to restore file groups, you should have backups of all file groups (both full and differential are possible). Here are steps for restoring a file group (FG1);

-- Backup tail-log if require

BACKUP LOG MyDatabase TO DISK = 'D:\Backups\TailLog.bak' WITH NO_TRUNCATE, NORECOVERY

-- Restore the Primary file group

RESTORE DATABASE MyDatabase FILEGROUP = 'Primary' TO DISK = 'D:\Backups\MyDatabase_FG1.bak' WITH NORECOVERY

-- Restore the FG1 file group

RESTORE DATABASE MyDatabase FILEGROUP = 'FG1' TO DISK = 'D:\Backups\MyDatabase_FG1.bak' WITH NORECOVERY

-- Restore the log (if need, can be restored to a specific time)

RESTORE LOG MyDatabase FROM DISK = 'D:\Backups\TailLog.bak' WITH RECOVERY

Thursday, July 16, 2009

Was really busy

Had no time to update my blog for few weeks, was really busy with work:

- New BI project: This is for one of the leading banks in Sri Lanka. This is the first time I am engaging with banking industry, in terms of BI. Lot of things to learn, really interesting.

- New set of SQL Server questions: It is almost done, this is the third time I involved with questions making. I have already written questions to two Microsoft SQL Server 2008 exams and this is for, again Microsoft but not for MS certifications. Anyway, all questions have been reviewed, seems they are happy with my questions :).

- New SQL Server 2005 batch: Done. Conducted another class for developers. Students were good, though there were gaps in knowledge levels.

- New SQL Server 2008 batch: Really interesting, this is my first SQL Server 2008 class. Participants are really good, lot of questions. This course is bit different, have not taught Maintaining SQL Server course (6231A) before. I am not much comfortable with the official materials, seems course book is not so rich and found some mistakes too, anyway it is too early to make comments on it.

Wednesday, May 27, 2009

Another victory of Sri Lanka

This clearly shows the reality, this shows who wants to destroy the great country, Sri Lanka. Sri Lanka won again, defeating the international terror.

How they voted at the UNHRC sessions

Victory for Lanka’s fight against terrorism

Thursday, May 21, 2009

PerformancePoint error: Unable to connect to Server

Are you experiencing this? You might. There are different set of connection related errors with PerformancePoint 2007. If you get errors like "Unable to connect to the specified server. Make sure the address is correct" or "The PerformancePoint could not connect to the specified data source", most probably, you will find the solution here.

If you face for an error like "Unable to connect to Server" with PerformancePoint dashboard designer options, the reason might be the unavailability of AJAX 1.0. Solution is here. It has another solution but installing AJAX fixed my problem.

Wednesday, May 20, 2009

Skipping prerequisities when installing PerformancePoint 2007 SP2

While installing the SP2 of PerformancePoint 2007, you may get an error like;

Failed package requirement analysis, please refer to the log file for more information.

This can be avoided by setting the SKIPREQCHECK as true with msiexec command. But you have remember one thing, when we install the product we use i switch but p switch has to be used when installing service packs. Please see the code below;

Installing the product, skipping the prerequisites;

msiexec /i PSCSrv.msi SKIPREQCHECK=1

Installing the service pack, skipping the prerequisities;

msiexec /p PSCSrv.msi SKIPREQCHECK=1

Tuesday, May 19, 2009

Installing PerformancePoint 2007 SP2

If you are using PerformancePoint 2007 and you have not installed the SP2, then this is the time for installing it. As you know, it did not support natively for SQL Server 2008, and now it allows. You will be installing this SP2 under three scenarios;

1. Update PerformancePoint Server with SP2 only. No changes to SQL Server 2005.

2. Update SQL Server 2005 to SQL Server 2008 and then update PerformancePoint Server to SP2.

3. Install PerformancePoint Server RTM and SQL Server 2008 in a new environment.

You need to make sure that you follow the correct steps for whatever the scenario you select.

You can find the instruction for all scenarios here.

You can download the SP2 from here(x86) and here(x64).

Monday, May 18, 2009

Sri Lanka is free from Terror

Saturday, February 28, 2009

Another BI workshop

This is the first workshop organized by IronOne Technologies. It was started on last Thursday and will continue till this Tuesday. I feel bit tired because it is very difficult to keep on talking :), without a partner. Almost all workshops that were organized before, were done by Gogula and me, hence work was shared, doing it alone is a very difficult task.

Audience of the workshop is really good, some have work on BI related projects too. Everybody exposes an average knowledge, hence it is not so difficult to run the workshop.

Thursday, February 12, 2009

How to distinguish between measures and KPIs…

As you all know, measures are identified at the analysis stage of Data Warehouse/BI project. Are all identified measures KPIs? This question came up, while we were having a discussion on one of our new projects. It was an interesting question, thought to make a post on it, showing what I think, what I have read…

In a way, yes, we can consider all measures as KPIs. But they become proper KPIs when it shows the quality of the value, health of the value. This requires another attribute to be attached:

Let’s take an example, Sales Amount, obviously it is a measure. It shows the sales amount for some dimensions (for current year, for a product). Does it show the health of that value, whether it is good or bad? No, it is just a value. Important thing to remember is, KPI is not just a value, it indicates the health of the value too. So, if we need to make Sales Amount as a KPI, we need to use another attribute such as Target. Target value allows to judge the Sales Amount whether it is healthier or not, hence Sales Amount becomes a KPI.

That’s how I see the difference between a measure and a KPI? Am I right? Any thoughts on this?

Wednesday, February 4, 2009

Is your architecture leading to Stovepipe data mart?

Question arose while we were discussing BI. I asked myself, whats the hell is "Stovepipe" data mart, I was aware of the word but picture behind it, did not come to my mind immediately. So, brushed up....

Stovepipe data marts are nothing but data marts that prevent integration of themselves for making a data warehouse because of the unavailability of conformed dimensions. In most cases, data marts (dimensions in data marts) are designed with their own rules, policies and mainly own structures. This results incompatible dimensions in different data marts though they represent same entity. It will be a mess, will be awkward situation when it comes for making a data warehouse, combining all data marts. Though data marts have many advantages, cost-wise, time-wise, etc.. this is the major disadvantage of them. Are you leading to a Stovepipe data mart?

Sunday, February 1, 2009

SSRS 2008 Video: Formatting text blocks individually in a single textbox

This is my first video, had been planning for months, and somehow was able to do one. I do not know whether the quality of this lesson is okay, because I made many mistakes while recording, this is my 8th or 9th recording :). This shows one of the newest features of Reporting Services 2008. Reporting Services 2005 did not allow us to format text blocks in a textbox with different styles. Now it is possible. Watch the video and see whether it is useful. Appreciate your comments on this.

Friday, January 30, 2009

REPEATABLE READ is fine, but want to let others to read non-locked records

We usually set the REPEATABLE READ Transaction Isolation Level in order to overcome Inconsistent analysis (nonrepeatable read) and Lost Update concurrency problems. This level do not let other users to read records that are read by your transaction, but creates a deadlock situation. Here is a simple example;

This code gets the first record available for updating (TOP 1, IsUpdated = 0) and does some processing, finally updates the record. This code does not allow others to modify the record while it is being accessed, avoiding inconsistent analysis and lost updates. But it reduces the concurrency. It does not allow other to get the second available record too, making a deadlock situation if the same is executed by another connection. There is no harm of letting others to access the second available record. How do we do it?

This cannot be implemented with REPEATABLE READ isolation level. All we have to do is, use UPDLOCK and READPAST query hints. UPLOCK puts exclusive lock on the object and does not allow others to obtain a lock on the same. READPAST skips all locked records and read the non-locked records. Here is the corrected code;

This locks the record while it is being accessed but allows to process the next available record for others.

1: SET TRANSACTION ISOLATION LEVEL REPEATABLE READ

2: BEGIN TRAN

3: DECLARE @Id int

4: SELECT TOP 1 @Id = Id

5: FROM dbo.Employee

6: WHERE IsUpdated = 0

7: 8: ......... -- more processing

9: 10: UPDATE dbo.Employee

11: SET Info = 'some text', IsUpdated = 1

12: WHERE Id = @Id

13: COMMIT TRAN

1: -- SET TRANSACTION ISOLATION LEVEL REPEATABLE READ

2: BEGIN TRAN

3: DECLARE @Id int

4: SELECT TOP 1 @Id = Id

5: FROM dbo.Employee

6: WITH (UPDLOCK, READPAST)

7: WHERE IsUpdated = 0

8: 9: ......... -- more processing

10: 11: UPDATE dbo.Employee

12: SET Info = 'some text', IsUpdated = 1

13: WHERE Id = @Id

14: COMMIT TRAN

Friday, January 23, 2009

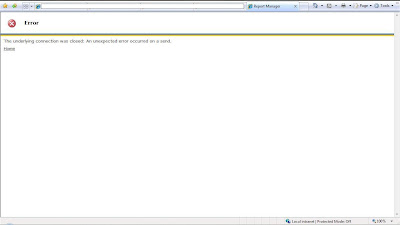

Reporting Services 2008 Error: The underlying connection was closed. An unexpected error occured on a send.

It hit me again. This does not allow you to deploy any reports or open report manager via the browser. This is because of the invalid configuration in reportserver.config file. The default value of the key SecureConnectionLevel has been set as 2, which requires SSL configuration. If you do not use SSL, make it as 0, making service least secure. Refer BOL for more info.

It hit me again. This does not allow you to deploy any reports or open report manager via the browser. This is because of the invalid configuration in reportserver.config file. The default value of the key SecureConnectionLevel has been set as 2, which requires SSL configuration. If you do not use SSL, make it as 0, making service least secure. Refer BOL for more info.

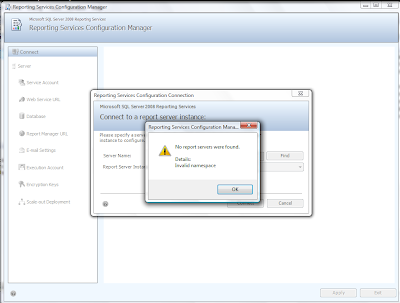

Reporting Services 2008 Error: Invalid namespace

This error comes when we try to configure Reporting Services 2008 with Reporting Services Configuration Manager. The reason for this is funny and identified as a known issue; It checks for wrong instance name. This has been fixed with the Cumulative Update Package 3.

If you are unable to download, another method has been given with this which I used. It gives instructions for changing the reportingservices.mof file.

This error comes when we try to configure Reporting Services 2008 with Reporting Services Configuration Manager. The reason for this is funny and identified as a known issue; It checks for wrong instance name. This has been fixed with the Cumulative Update Package 3.

If you are unable to download, another method has been given with this which I used. It gives instructions for changing the reportingservices.mof file.

Subscribe to:

Posts (Atom)