Are you seeing many connections with “sleeping” status in your SQL Server? If so, have you ever tried to find out why? Is it normal? Does it indicate some sort of connection-related problem?

This topic came up yesterday when I was at one of my clients’ sites. They had noticed this on their SQL Server and had already sorted it out. Understanding the standard behavior, and making sure everything works as it should, helps us all build efficient solutions; hence this post.

Sleeping means that the connection is established and not closed, is not active, and is waiting for a command. This is normal, because most applications keep the connection even after the work is done, in order to reduce the cost of opening and closing connections. The main purpose of these connections is reusability: if an application repeatedly uses connections to retrieve data, the cost of establishing a connection is avoided when an existing one can be reused instead of creating a new one. Is maintaining many sleeping connections an overhead? In a way, yes, though it is comparatively low. If everything is properly coded, you should not see many sleeping connections. Can there be sleeping connections that cannot be reused? Yes, there can, and we should avoid that situation.
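The tests below use sp_who to look at these connections. As a rough alternative, the sketch here lists sleeping user sessions from the sys.dm_exec_sessions view. It assumes C# 9 top-level statements, the System.Data.SqlClient provider (with Microsoft.Data.SqlClient only the using directive changes), and the TestDatabase/Test connection string used throughout this post. Without the VIEW SERVER STATE permission the view only returns your own session.

```csharp
using System;
using System.Data.SqlClient;

// Connection string from the examples in this post; adjust for your server.
const string connectionString =
    "Server=(local);Database=TestDatabase;UId=Test;Pwd=password;";

// User sessions that are connected but idle, i.e. waiting for a command.
const string sleepingSessionsQuery = @"
SELECT session_id, login_name, program_name, last_request_end_time
FROM sys.dm_exec_sessions
WHERE is_user_process = 1 AND status = 'sleeping'
ORDER BY session_id;";

using var connection = new SqlConnection(connectionString);
connection.Open();
using var command = new SqlCommand(sleepingSessionsQuery, connection);
using SqlDataReader reader = command.ExecuteReader();
while (reader.Read())
{
    short sessionId = reader.GetInt16(0);   // session_id is smallint
    string login = reader.GetString(1);
    // program_name and last_request_end_time can be NULL, so read them defensively.
    string program = reader.IsDBNull(2) ? "(none)" : reader.GetString(2);
    string lastEnd = reader.IsDBNull(3) ? "(never)" : reader.GetDateTime(3).ToString("u");
    Console.WriteLine($"{sessionId,5}  {login,-15}  {program,-30}  last request ended: {lastEnd}");
}
```

The session running this query is itself "running", so it does not appear in its own output.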

Testing with a Console Application

OK, let’s see how it happens. The code below is a console application. It opens a connection, executes a command, and finally closes the connection.

```csharp
using System;
using System.Data.SqlClient;

SqlConnection sqlConnection = new SqlConnection("Server=(local);Database=TestDatabase;UId=Test;Pwd=password;"); // line 1
sqlConnection.Open(); // line 2
// line 3
SqlCommand sqlCommand = new SqlCommand("select id from dbo.Employee", sqlConnection); // line 4
int id = (int)sqlCommand.ExecuteScalar(); // line 5
sqlConnection.Close(); // line 6
Console.WriteLine("Press enter to close."); // line 7
Console.ReadLine(); // line 8
// line 9
```

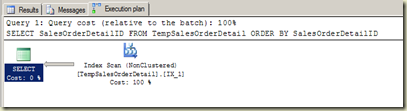

Start debugging the code and execute up to the 3rd line. The connection is now open. Let’s see how SQL Server maintains it: open a query window and run sp_who for the login Test (EXEC sp_who 'Test').

Note that the connection is established but is shown under the master database, even though we connected to TestDatabase. Now continue executing the .NET code up to the 6th line, without executing the 6th line itself. Run sp_who again.

Now the connection is set to the correct database and the command has been executed. The connection is still maintained by SQL Server, waiting for a command, because SQL Server has not received any instruction to close it. Now execute the 6th line to close the connection and run sp_who again. Has it been removed? NO, the connection is still there with the status sleeping. The reason is that ADO.NET has not yet told SQL Server to drop the connection, so SQL Server still maintains it. Let the program run to completion and check again: this time the connection is gone.
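If you want the sleeping connection to disappear without ending the application, the static SqlConnection.ClearPool method empties the pool for that connection's connection string (SqlConnection.ClearAllPools does the same for every pool in the process). A minimal sketch, assuming C# 9 top-level statements, System.Data.SqlClient and the connection string from the test above:

```csharp
using System;
using System.Data.SqlClient;

var sqlConnection = new SqlConnection(
    "Server=(local);Database=TestDatabase;UId=Test;Pwd=password;");
sqlConnection.Open();
sqlConnection.Close();
// Here sp_who still lists the session as sleeping: Close() only handed the
// physical connection back to the pool.

// Empty the pool that belongs to this connection string; idle pooled
// connections are closed, so the sleeping session should go away.
SqlConnection.ClearPool(sqlConnection);

Console.WriteLine("Pool cleared. Run sp_who 'Test' again, then press enter to exit.");
Console.ReadLine();
```

This is mainly useful for experiments like this one; clearing pools in production code throws away exactly the reuse that pooling is meant to provide.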

Am I supposed to call the “Dispose” method?

Now we have a question. We closed the connection with the sqlConnection.Close() method, but no instruction reached SQL Server. Should we call Dispose()? Add sqlConnection.Dispose(); between the 6th and 7th lines and run the code again. Monitor the connection with sp_who as in the previous exercise, checking it once the newly added line has executed (before the program finishes). Are you still seeing the connection, even after calling the Dispose method? Yes, you should.

What this test tells us is that the connection is maintained until the application is completely closed. Close() and Dispose() do not end the physical connection; they return it to ADO.NET’s connection pool, which belongs to the application process rather than to the SqlConnection object, so the connection stays (sleeping) until the process exits or the pool removes it. Why is that? This is how reusability is achieved: ADO.NET can reuse the connection, without recreating it, when it is needed again. See the code below.

```csharp
using System;
using System.Data.SqlClient;

SqlConnection sqlConnection = new SqlConnection("Server=(local);Database=TestDatabase;UId=Test;Pwd=password;"); // line 1
sqlConnection.Open(); // line 2
// line 3
SqlCommand sqlCommand = new SqlCommand("select id from dbo.Employee", sqlConnection); // line 4
int id = (int)sqlCommand.ExecuteScalar(); // line 5
sqlConnection.Close(); // line 6
// line 7
sqlConnection = new SqlConnection("Server=(local);Database=TestDatabase;UId=Test;Pwd=password;"); // line 8
sqlConnection.Open(); // line 9
// line 10
sqlCommand = new SqlCommand("select 1", sqlConnection); // line 11
id = (int)sqlCommand.ExecuteScalar(); // line 12
sqlConnection.Close(); // line 13
// line 14
Console.WriteLine("Press enter to close."); // line 15
Console.ReadLine(); // line 16
```

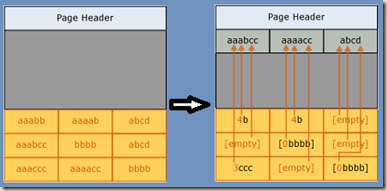

In this code, we make two connections: at the 9th line we open another one. Once the 9th line has executed, run sp_who again. Are there two connections? No. ADO.NET reuses the connection that was used for the first command, taking it from the connections available in the pool. This is called the Connection Pool, and the process is referred to as Connection Pooling. One factor that determines whether a connection can be reused is the connection string: the connection string of the new connection must match that of the existing connections in the pool. If ADO.NET cannot find a matching one, a new pool is created for that connection string and a new connection is added to it and used to execute the command. That’s how new connections get added. That is one factor!
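Instead of watching sp_who, you can also compare the server session id (@@SPID) that each connection reports. The sketch below assumes C# 9 top-level statements, System.Data.SqlClient and the post's connection string. It opens and closes two connections with the same string, then a third whose string has Application Name=PoolDemo appended; "PoolDemo" is an arbitrary, hypothetical value used only to make the string different.

```csharp
using System;
using System.Data.SqlClient;

const string connectionString =
    "Server=(local);Database=TestDatabase;UId=Test;Pwd=password;";
// Same settings plus a different Application Name, so the string no longer matches.
const string otherConnectionString = connectionString + "Application Name=PoolDemo;";

// Opens a connection, reads the server session id, then closes (disposes) it.
static short GetSpid(string cs)
{
    using var connection = new SqlConnection(cs);
    connection.Open();
    using var command = new SqlCommand("SELECT @@SPID", connection);
    return (short)command.ExecuteScalar();   // @@SPID is smallint
}

short first = GetSpid(connectionString);
short second = GetSpid(connectionString);      // same string: pooled connection reused
short third = GetSpid(otherConnectionString);  // different string: separate pool

Console.WriteLine($"first={first}, second={second}, third={third}");
```

You should see the same SPID for the first two calls and a different one for the third: the first physical connection is still sitting in the original pool, so the non-matching string has to open a new one.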

Same connection string, but a new connection is added

There is another factor that causes a new connection to be added to the pool instead of an existing one being reused, even with the same connection string. Remove the 6th line (the Close call) from the code above and debug. Once the 9th line has executed, check the connections. This is what I see:

A new connection has been created, which means the one we created before cannot be used. The reason is that we have not closed it, so it is an “unusable” connection. If a connection is not closed, it cannot be reused even though the connection string is the same. This could be one of the reasons for seeing many sleeping connections, and in this case it is NOT normal: it wastes resources on the system. If you keep writing code like this, you will end up with a large number of sleeping connections. So make sure you close your connection once the command has executed.
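The effect of closing can be measured with @@SPID as well. This sketch (assuming C# 9 top-level statements, System.Data.SqlClient and the post's connection string) opens five connections without closing them, then five more with a using declaration, which disposes and therefore closes each connection at the end of its loop iteration, even if an exception is thrown:

```csharp
using System;
using System.Collections.Generic;
using System.Data.SqlClient;

const string connectionString =
    "Server=(local);Database=TestDatabase;UId=Test;Pwd=password;";

static short QuerySpid(SqlConnection connection)
{
    using var command = new SqlCommand("SELECT @@SPID", connection);
    return (short)command.ExecuteScalar();
}

// 1) Leaky pattern: opened but never closed. The list only keeps the
//    objects alive for the duration of the demo.
var leaked = new List<SqlConnection>();
var leakedSpids = new HashSet<short>();
for (int i = 0; i < 5; i++)
{
    var connection = new SqlConnection(connectionString);
    connection.Open();
    leakedSpids.Add(QuerySpid(connection));
    leaked.Add(connection);
}

// 2) Recommended pattern: the using declaration returns the connection
//    to the pool at the end of every iteration.
var pooledSpids = new HashSet<short>();
for (int i = 0; i < 5; i++)
{
    using var connection = new SqlConnection(connectionString);
    connection.Open();
    pooledSpids.Add(QuerySpid(connection));
}

Console.WriteLine($"Distinct sessions without Close: {leakedSpids.Count}");
Console.WriteLine($"Distinct sessions with using:    {pooledSpids.Count}");

// Hand the leaked connections back to the pool before exiting.
foreach (var connection in leaked) connection.Dispose();
```

With a reachable server, the first loop should report five distinct sessions and the second only one, because each iteration returns the same pooled connection before the next Open().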

Making sure that connections are reused

Is there a way to see whether sleeping connections are being reused? Yes, there is. It can be monitored with SQL Server Profiler. Open SQL Server Profiler and connect to your SQL Server instance. Use the Standard template, which includes the RPC:Completed event under Stored Procedures. Select all columns (you may have to uncheck the selected events and check them again) and filter for your test database. Now start the trace and run the above code again. You should see “exec sp_reset_connection”, which indicates that the connection was reset; in other words, it was reused for the new connection.
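sp_reset_connection also clears session state such as temporary tables, which offers a way to observe the reset from code. In this sketch (C# 9 top-level statements, System.Data.SqlClient, the post's connection string; #PoolDemo is a hypothetical temp table name) the first connection creates a temp table and the second, which should receive the same pooled connection, checks whether the table still exists:

```csharp
using System;
using System.Data.SqlClient;

const string connectionString =
    "Server=(local);Database=TestDatabase;UId=Test;Pwd=password;";

short firstSpid;
using (var connection = new SqlConnection(connectionString))
{
    connection.Open();
    // The temp table belongs to this session until the session is reset.
    using var command = new SqlCommand("CREATE TABLE #PoolDemo (id int); SELECT @@SPID;", connection);
    firstSpid = (short)command.ExecuteScalar();
} // Dispose returns the physical connection to the pool; the session stays sleeping.

short secondSpid;
bool tempTableStillExists;
using (var connection = new SqlConnection(connectionString))
{
    connection.Open(); // identical string, so the pooled connection is handed out again
    using var command = new SqlCommand(
        "SELECT @@SPID, CASE WHEN OBJECT_ID('tempdb..#PoolDemo') IS NULL THEN 0 ELSE 1 END;",
        connection);
    using var reader = command.ExecuteReader();
    reader.Read();
    secondSpid = reader.GetInt16(0);
    tempTableStillExists = reader.GetInt32(1) == 1;
}

Console.WriteLine($"SPIDs: {firstSpid} then {secondSpid}");
Console.WriteLine($"#PoolDemo survived: {tempTableStillExists}");
```

If the connection was reused, both SPIDs should match and the temp table should be gone, since the reset is applied before the first command on the reused connection.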

I do not want to reuse connections

If you ever need NOT to reuse connections and want to get rid of sleeping connections, there is a way: add Pooling=False; to the connection string. This forces the connection to be removed as soon as the Close method is called. Though this is possible, it is NOT recommended, because every Open() then pays the full cost of establishing a new connection.
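A minimal sketch of turning pooling off, assuming C# 9 top-level statements and System.Data.SqlClient. SqlConnectionStringBuilder is used here only to avoid typos in keywords; its Pooling property corresponds to the Pooling keyword, and the other values are the ones used in this post.

```csharp
using System;
using System.Data.SqlClient;

var builder = new SqlConnectionStringBuilder
{
    DataSource = "(local)",
    InitialCatalog = "TestDatabase",
    UserID = "Test",
    Password = "password",
    Pooling = false   // equivalent to adding Pooling=False; to the string
};

using (var connection = new SqlConnection(builder.ConnectionString))
{
    connection.Open();
    using var command = new SqlCommand("SELECT 1", connection);
    command.ExecuteScalar();
} // with pooling off, Close/Dispose ends the session on the server

Console.WriteLine(builder.ConnectionString);
Console.WriteLine("Run sp_who 'Test' now: no sleeping session should remain. Press enter to exit.");
Console.ReadLine();
```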

How about ASP.NET applications?

As you have noticed, connections are removed from SQL Server once the console application is closed. Do not expect the same from ASP.NET applications. The pool lives in the web server’s worker process, not in the browser, so even after you close the browser the connections stay in sleeping status until the pool removes them or the worker process ends.

Connection removal

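Connections in the pool are removed based on two factors. One is Connection Lifetime, which can be set in the connection string. When a connection is returned to the pool, its creation time is compared with the current time, and the connection is destroyed if its age in seconds exceeds Connection Lifetime. The default value is 0, which means no specific limit. The other factor is the validity of the connection. If a pooled connection can no longer communicate with the server, it is marked as invalid. The connection pooler scans the pool periodically for invalid connections and releases any it finds. In addition, according to the SqlClient documentation, the pooler removes connections that have been idle for approximately 4 to 8 minutes.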
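Connection Lifetime and the pool size limits are ordinary connection string keywords, so SqlConnectionStringBuilder can show how a string is interpreted without touching the server. A sketch assuming C# 9 top-level statements and System.Data.SqlClient; the 60 seconds is an arbitrary example value. The builder exposes Connection Lifetime as its LoadBalanceTimeout property, since "Connection Lifetime" and "Load Balance Timeout" are synonyms.

```csharp
using System;
using System.Data.SqlClient;

// The post's connection string plus an example Connection Lifetime of 60 seconds.
var builder = new SqlConnectionStringBuilder(
    "Server=(local);Database=TestDatabase;UId=Test;Pwd=password;Connection Lifetime=60;");

Console.WriteLine($"Connection Lifetime (s): {builder.LoadBalanceTimeout}");
Console.WriteLine($"Min Pool Size: {builder.MinPoolSize}");   // not set, so the default (0)
Console.WriteLine($"Max Pool Size: {builder.MaxPoolSize}");   // not set, so the default (100)
Console.WriteLine(builder.ConnectionString);                  // normalized keywords
```

With this string, a connection older than 60 seconds is destroyed when it is returned to the pool instead of being kept as a sleeping connection.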

What if I create 101 connections without closing?

You might have seen or experienced this error:

Timeout expired. The timeout period elapsed prior to obtaining a connection from the pool. This may have occurred because all pooled connections were in use and max pool size was reached
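To reproduce this error quickly, you can lower the pool limit with the Max Pool Size keyword and keep connections open. A sketch assuming C# 9 top-level statements, System.Data.SqlClient and the post's connection string; the values 5 and 5 seconds are arbitrary. Connect Timeout also bounds how long Open() waits for a pooled connection, and the pool reports the timeout as an InvalidOperationException.

```csharp
using System;
using System.Collections.Generic;
using System.Data.SqlClient;

// Pool limited to 5 connections; Open() gives up after 5 seconds instead of the default 15.
const string connectionString =
    "Server=(local);Database=TestDatabase;UId=Test;Pwd=password;Max Pool Size=5;Connect Timeout=5;";

// Every opened connection stays referenced and is never closed, like the leaky code in this post.
var openConnections = new List<SqlConnection>();
try
{
    for (int i = 1; i <= 6; i++)
    {
        var connection = new SqlConnection(connectionString);
        connection.Open();   // the 6th call waits for a free connection, then throws
        openConnections.Add(connection);
        Console.WriteLine($"Opened connection {i}");
    }
}
catch (InvalidOperationException ex)
{
    Console.WriteLine($"After {openConnections.Count} connections: {ex.Message}");
}
finally
{
    // Return everything to the pool so the process does not hold the sessions.
    foreach (var connection in openConnections) connection.Dispose();
}
```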

By default, a pool can hold at most 100 connections, and this can be adjusted with the Max Pool Size keyword in the connection string (the limit applies per pool, that is, per connection string). Does that mean we can create only 100 connections without closing them? Actually, NO. Put this code in an ASP.NET page and run it. Then check with sp_who for the number of connections with sleeping status.

```csharp
// Runs inside an ASP.NET page (for example, in its load handler); requires System.Data.SqlClient.
SqlConnection sqlConnection = new SqlConnection("Server=(local);Database=TestDatabase;UId=Test;Pwd=password;");

for (int x = 0; x < 110; x++)
{
    sqlConnection = new SqlConnection("Server=(local);Database=TestDatabase;UId=Test;Pwd=password;");
    sqlConnection.Open();

    SqlCommand sqlCommand = new SqlCommand("select id from dbo.Employee", sqlConnection);
    int id = (int)sqlCommand.ExecuteScalar();
    // Intentionally no sqlConnection.Close(): every iteration leaves its connection open.
}
```

This code creates 110 connections and, guess what? It works fine. When I check SQL Server for the Test login, I can see only 87 connections. If you change 110 to something like 150, you will definitely get the error, and you will see 100 connections in SQL Server. Just to make sure the code was working correctly, I started the trace and ran the code again. I could clearly see the new connections, and “exec sp_reset_connection” too. This means that even though a connection is unusable (because we have not closed it), it gets reset for a new connection. The factor or criteria behind this behavior are still unknown to me; I will update the post if I find the reason. I would appreciate it if you could share your thoughts on this if you know the reason.
