We have been building data warehouses to centralize enterprise data and address business intelligence requirements. Almost all of them were built on the traditional architecture called SMP: Symmetric Multi-Processing. Even though we use various design strategies to improve performance and manage volume efficiently, the need to scale up comes up often. Without much consideration of the related factors, we tend to add more resources and spend more money to address the requirement. At a certain point, though, we need to accept that the existing architecture is no longer sufficient and needs a change: from SMP to MPP.

What is SMP architecture? It is a set of tightly coupled processors that share resources and connect to a single system bus. With SMP, the system bus limits how far we can scale up: as the number of processors and the data load increase, the bus can become overloaded and turn into a bottleneck.

MPP, Massively Parallel Processing, is based on a shared-nothing architecture. An MPP system uses multiple servers called nodes, each with its own dedicated resources, and executes distributed queries on those nodes independently, offering much better performance than SMP.
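To make the shared-nothing idea concrete, here is a minimal sketch in Python of how rows might be spread across nodes by hashing a distribution key. The names `node_for`, `distribute`, `customer_id` and the node count of 4 are hypothetical, chosen only for illustration; a real MPP product decides placement with its own mechanisms.

```python
from collections import defaultdict
import zlib


def node_for(key: str, node_count: int) -> int:
    """Pick a node by hashing the distribution key (stable across runs)."""
    return zlib.crc32(key.encode("utf-8")) % node_count


def distribute(rows, key_column, node_count):
    """Assign every row to exactly one node; nodes hold disjoint data."""
    nodes = defaultdict(list)
    for row in rows:
        nodes[node_for(str(row[key_column]), node_count)].append(row)
    return nodes


# Hypothetical sample rows standing in for a fact table.
rows = [{"customer_id": i, "amount": 10 * i} for i in range(1, 9)]
nodes = distribute(rows, "customer_id", node_count=4)

for node_id in sorted(nodes):
    print(node_id, [r["customer_id"] for r in nodes[node_id]])
```

Because the same key always hashes to the same node, each node can work on its own slice with its own resources, which is the property the shared-nothing design relies on.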

How do we know where the boundary is, or which factors indicate the need for MPP? Here are some that will help you decide.

Here is the first one:

This is all about data growth. Yes, we expect a reasonable level of data growth with data warehousing, but if the volume increases drastically and we need to keep plugging in more and more storage, it indicates a need for MPP. We are not talking about megabytes or gigabytes but terabytes or more. Can't we handle the situation by just adding storage? Yes, it is possible, but there will definitely be a limit. Not only that, the cost goes up too. We do not see this limitation with MPP and, in a way, adding storage after the initial implementation might not be as expensive as it is with SMP.

Here is the second:

If somebody talks about BI today, real-time or near-real-time is definitely an area of concern. A traditional data warehouse implementation handles this to some extent but not fully, mainly because of capacity, loading, and query complexity. Generally, on the Microsoft platform, we use SSIS for loading data, and denormalized or semi-normalized tables, designed either as general tables or as star/snowflake schemas, for holding it. Assume a user requests real-time data and a fact table that contains billions of records has to be accessed; the performance of the query might not be at the expected level. With MPP, since the data can be distributed across multiple nodes, data retrieval is much faster and real-time queries are handled efficiently.
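The way a query over distributed data is answered can be sketched roughly as follows: every node aggregates only the rows it holds, and a control step merges the partial results. This is a toy illustration in Python, not how any particular MPP engine is implemented; `partial_sum`, `distributed_total` and the sample rows are hypothetical, and threads are used only to show the shape of the work, not to demonstrate a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(node_rows):
    """Runs per node: aggregate only the rows that node holds."""
    totals = {}
    for row in node_rows:
        totals[row["region"]] = totals.get(row["region"], 0) + row["amount"]
    return totals


def distributed_total(nodes):
    """Control step: run the same aggregation on all nodes, then merge."""
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        partials = list(pool.map(partial_sum, nodes))
    merged = {}
    for partial in partials:
        for region, amount in partial.items():
            merged[region] = merged.get(region, 0) + amount
    return merged


# Hypothetical slices of one fact table, one list per node.
nodes = [
    [{"region": "east", "amount": 100}, {"region": "west", "amount": 50}],
    [{"region": "east", "amount": 25}],
    [{"region": "west", "amount": 75}, {"region": "north", "amount": 10}],
]
result = distributed_total(nodes)
print(result)
```

Each node touches only a fraction of the fact table, so the time to scan billions of rows is divided among the nodes instead of being carried by one server.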

Here is the third:

A traditional data warehouse requires structured data, in other words data in a known relational format. However, modern BI is not based on this alone; data with unknown structure is not rare and is often required for analysis. How do we handle this? One data warehouse for known, structured data and another for unstructured data? Even if we maintain two data warehouses, how can an analysis be performed combining the two? Can traditional tools attached to the existing architecture combine them efficiently, process them fast, and produce the required result? No, it is not possible, and that means it is high time for MPP. This does not mean that MPP handles all of these areas by itself, but it supports them. MPP helps to process unstructured data much more efficiently than SMP, and the Microsoft platform allows us to combine structured and unstructured data through a user-friendly interface using its MPP-based solution.

Here is the fourth:

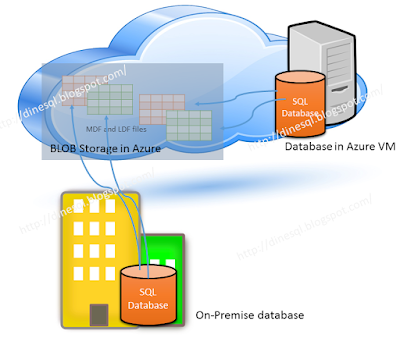

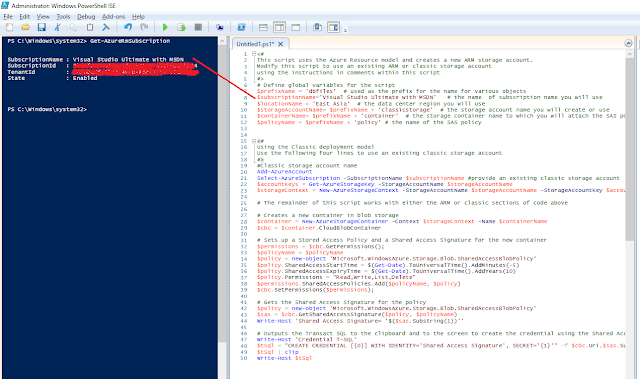

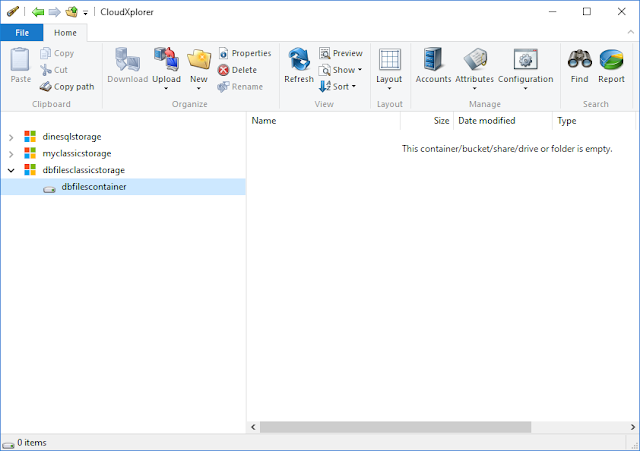

Mostly, we extract data from on-premises data storage, and traditional ETL handles this well. However, with modern implementations, data generation is not limited to on-premises applications; a lot of important data is generated by cloud applications. In a way, this increases both the load and the complexity, and sometimes it turns traditional ETL into ELT. MPP architecture has the capabilities to handle these complexities and improve the overall performance, so this can be considered another reason for moving from SMP to MPP.
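The difference between ETL and ELT is mostly about where the transformation runs. The sketch below shows the ELT order in Python, using the standard-library `sqlite3` module purely as a stand-in for the warehouse engine: raw rows are loaded as-is into a staging table, and cleaning and aggregation happen afterwards inside the engine with SQL. The table names `staging_sales` and `fact_daily_sales` and the sample rows are hypothetical.

```python
import sqlite3

# In-memory database used only as a stand-in for the warehouse engine.
conn = sqlite3.connect(":memory:")

# Extract + Load: land the source rows untouched in a staging table.
conn.execute("CREATE TABLE staging_sales (sold_on TEXT, region TEXT, amount TEXT)")
raw_rows = [
    ("2024-01-05", " East ", "100.50"),
    ("2024-01-05", "east", "20"),
    ("2024-01-06", "WEST", "75.25"),
]
conn.executemany("INSERT INTO staging_sales VALUES (?, ?, ?)", raw_rows)

# Transform: clean and aggregate inside the engine, after loading.
conn.execute(
    """
    CREATE TABLE fact_daily_sales AS
    SELECT sold_on,
           lower(trim(region)) AS region,
           SUM(CAST(amount AS REAL)) AS total_amount
    FROM staging_sales
    GROUP BY sold_on, lower(trim(region))
    """
)

facts = conn.execute(
    "SELECT sold_on, region, total_amount "
    "FROM fact_daily_sales ORDER BY sold_on, region"
).fetchall()
print(facts)
```

With ETL, the trimming, casting, and summing would happen in a separate tool before the load. With ELT, that work is pushed to the engine, which is where a distributed architecture can put its nodes to use.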

There can be more reasons, but I think these are the significant ones. Please comment if you see others.