Friday, September 8, 2017

SQL Server - Dropping Primary Key will drop the Clustered Index?

Thursday, April 13, 2017

SQL Server Splits the page when I change an Integer Column Value

-- creating test table

CREATE TABLE dbo.TestTable

(

Id int PRIMARY KEY

, Value char(4000) NOT NULL

)

GO

-- inserting 2 records

INSERT INTO dbo.TestTable

VALUES

(2, replicate('a', 4000)), (5, replicate('b', 4000));

SELECT Id, Value, ph.* FROM dbo.TestTable CROSS APPLY fn_PhysLocCracker(%%PHYSLOC%%) ph;

UPDATE dbo.TestTable

SET Value = replicate('x', 4000)

WHERE id = 2

SELECT Id, Value, ph.*

FROM dbo.TestTable

CROSS APPLY fn_PhysLocCracker(%%PHYSLOC%%) ph;

Just like that, if we make a change to the integer value, we should not see any change on pages used. Let's make a small change and see.

UPDATE dbo.TestTable

SET Id = 3

WHERE id = 2;

SELECT Id, Value, ph.*

FROM dbo.TestTable

CROSS APPLY fn_PhysLocCracker(%%PHYSLOC%%) ph;

Did you notice the change? Why the second record has been moved to a new page? Mean Page-Split has been occurred with this changed?

Is it because of the data type? It is not. Note that this is not just an integer column. It is the PRIMARY KEY; The clustered key. What happened was, SQL Server updated the Value column as an in-place update. It does not require additional space. However SQL Server does not perform in-place update for key columns. It performs delete-plus-insert operation for key column updates. It requires space for the INSERT. That is the reason for the page split. Understand that this behavior is not only for integer, it is for all key columns.

This is why we discourage you to select a column as the key column if it can be changed by a business operation. It is always recommended to use a non-volatile column as the key column that do not expect changes on values.

SQL Server - Checking Index Fragmentation - Understanding LIMITED, SAMPLED and DETAILED

| Mode | Details |

|---|---|

| LIMITED |

|

| SAMPLED |

|

| DETAILED |

|

Wednesday, February 22, 2017

How to change the default Fill Factor value of SQL Server instance

SP_CONFIGURE;

USE tempdb; GO CREATE TABLE dbo.Customer ( CustomerId int PRIMARY KEY , LastName varchar(20) NOT NULL INDEX IX_Customer_LastName NONCLUSTERED ); GO

You can change the default value using sp_configure. Once it is change, you need a restart of the service in order to get the value applied.

SP_CONFIGURE 'fill factor (%)', 80;

Friday, December 30, 2016

Inserting records, ignoring duplicates, recording them

USE tempdb;

GO

-- Creating a table for Customers

IF OBJECT_ID('dbo.Customers') IS NOT NULL

DROP TABLE dbo.Customers;

GO

CREATE TABLE Customers

(

Title char(5) NOT NULL

, FirstName varchar(50) NOT NULL

, MiddleName varchar(50) NULL

, LastName varchar(50) NOT NULL

, EmailAddress varchar(100) NOT NULL

)

-- Addin the unique index on the Email Column

CREATE UNIQUE INDEX IX_Customers ON dbo.Customers (EmailAddress)

-- Inserting one customer for testing

INSERT INTO dbo.Customers

(Title, FirstName, MiddleName, LastName, EmailAddress)

VALUES

('Mr.', 'Dinesh', NULL, 'Priyankara', 'dinesh@dinesql.com')

-- This batch contains 4 records, note the 2nd and 3rd record -- They have the same emial address INSERT INTO dbo.Customers (Title, FirstName, MiddleName, LastName, EmailAddress) SELECT 'Mr.', 'Yeshan', NULL, 'Santhush', 'yeshan@dinesql.com' UNION ALL SELECT 'Ms.', 'Jane', NULL, 'Western', 'jane@dinesql.com' UNION ALL SELECT 'Ms.', 'Jane', NULL, 'Black', 'jane@dinesql.com' UNION ALL SELECT 'Mr.', 'Jack', NULL, 'Knight', 'jack@dinesql.com'

-- dropping and recreating the index with IGNORE_DUP_KEY option DROP INDEX IX_Customers ON dbo.Customers GO CREATE UNIQUE INDEX IX_Customers ON dbo.Customers (EmailAddress) WITH (IGNORE_DUP_KEY = ON) -- checking the insert again. It inserts all except the duplicate INSERT INTO dbo.Customers (Title, FirstName, MiddleName, LastName, EmailAddress) SELECT 'Mr.', 'Yeshan', NULL, 'Santhush', 'yeshan@dinesql.com' UNION ALL SELECT 'Ms.', 'Jane', NULL, 'Western', 'jane@dinesql.com' UNION ALL SELECT 'Ms.', 'Jane', NULL, 'Black', 'jane@dinesql.com' UNION ALL SELECT 'Mr.', 'Jack', NULL, 'Knight', 'jack@dinesql.com'

- Load all email addresses to a Temporary Table or Table Variable before performing the main operation. After main Insert Operation, do a comparison using LEFT OUTER JOIN to find out records that were not inserted.

- Use Integration Services for the operation. It has allows you to capture problematic records using error handlers or can duplicate the flow, record all emails addresses in another container, and do the comparison at the end of the task.

Monday, October 24, 2016

Disable PRIMARY KEY before data loading

USE tempdb;

GO

-- create a table with a primary key which is clustered

CREATE TABLE dbo.Customer

(

CustomerID int identity(1,1) not null

, FirstName varchar(100) not null

, Constraint PK_Customer PRIMARY KEY CLUSTERED (CustomerID)

);

-- create a table with a primary key which is nonclustered

CREATE TABLE dbo.Employee

(

EmployeeID int identity(1,1) not null

, FirstName varchar(100) not null

, Constraint PK_Employee PRIMARY KEY NONCLUSTERED (EmployeeID)

);

-- Insert some records

INSERT INTO dbo.Customer

(FirstName) VALUES ('Dinesh'), ('Yeshan');

INSERT INTO dbo.Employee

(FirstName) VALUES ('Jane'), ('Jack');

-- checking records

-- This should return all recorded we inserted

SELECT * FROM dbo.Customer;

SELECT * FROM dbo.Employee;

-- Disable the primary key of the Customer

-- by disabling associated index

ALTER INDEX PK_Customer ON dbo.Customer DISABLE;

-- Now following statements are not possible

INSERT INTO dbo.Customer

(FirstName) VALUES ('Kate'), ('Mihen');

SELECT * FROM dbo.Customer;

-- Disable the primary key of the Employee

-- by disabling the associated index

ALTER INDEX PK_Employee ON dbo.Employee DISABLE;

-- These statements work without any issue

INSERT INTO dbo.Employee

(FirstName) VALUES ('Nazir'), ('Daniel');

SELECT * FROM dbo.Employee;

-- Enabling both

ALTER INDEX PK_Customer ON dbo.Customer REBUILD;

ALTER INDEX PK_Employee ON dbo.Employee REBUILD;

Sunday, July 24, 2016

SQL Server Brain Basher of the Week #048 - Dropping Clustered Index

-- creating a test table

CREATE TABLE dbo.TestTable

(

TestTableId int index ix_TestTable_Id clustered

, TestTableValue1 varchar(100) not null

, TestTableValue2 char(5) not null index ix_TestTable_Value2 nonclustered

);

GO

-- Inserting test records

INSERT INTO dbo.TestTable

VALUES

(1, 'Hello world', 'ABC01')

, (2, 'Hi', 'ABC02')

-- Checks indexes availeble

SELECT * FROM sys.dm_db_index_physical_stats(DB_ID(), OBJECT_ID('dbo.TestTable'), NULL, NULL, 'DETAILED');

-- Dropping the clustered index

DROP INDEX ix_TestTable_Id ON dbo.TestTable;

-- Checking the indexes and data after dropping

SELECT * FROM sys.dm_db_index_physical_stats(DB_ID(), OBJECT_ID('dbo.TestTable'), NULL, NULL, 'DETAILED');

SELECT * FROM dbo.TestTable;

Sunday, June 28, 2015

Indexes should be dropped before a bulk insert or not

Considering that, how do we determine whether we should drop the indexes before loading or insert all records with indexes in place?

| Indexes | Relative amount of new data |

|---|---|

| Clustered index only | 30% |

| Clustered and one nonclustered index | 25% |

| Clustered and two nonclustered indexes | 25% |

| Single nonclustered index only | 100% |

| Two nonclustered indexes | 60% |

You can read more info on this at: https://msdn.microsoft.com/en-us/library/ms177445.aspx

Sunday, March 22, 2015

SQL Server Brain Basher of the Week #004

CREATE CLUSTERED COLUMNSTORE INDEX IX_Sales ON Sales;

Thursday, March 12, 2015

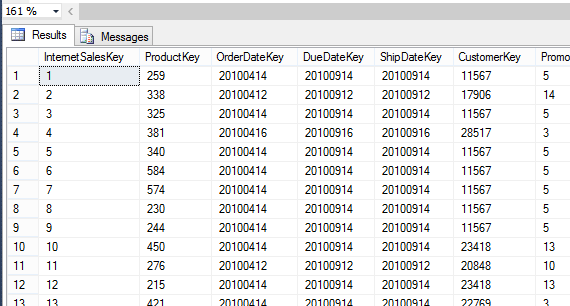

Number of records in Non-Clustered Index Leaf Level is same as records in the table?

Answer is yes. Here is simple code for showing it. This table has 7,301,921 records and leaf level of clustered index holds 7,301,921 records too.

SELECT COUNT(*) FROM dbo.InternetSales;

SELECT index_id, partition_number, index_type_desc, index_level, record_count

FROM sys.dm_db_index_physical_stats (DB_ID('SalesDB')

,OBJECT_ID('dbo.InternetSales'), NULL, NULL, 'DETAILED');

Now, let's create an index on OrderDateKey which contains duplicates.

Now if I execute the following code, it will show that number of records in both indexes are same.

CREATE INDEX IX_Sales_OrderDateKey ON dbo.InternetSales (OrderDateKey);

GO

SELECT index_id, partition_number, index_type_desc, index_level, record_count

FROM sys.dm_db_index_physical_stats (DB_ID('SalesDB')

,OBJECT_ID('dbo.InternetSales'), NULL, NULL, 'DETAILED');

Monday, January 20, 2014

Can a query derive benefit from a multicolumn index for single-column filtering?

Assume that an index has been created using three columns. Will SQL Server use the index if the 2nd column is used for filtering?

I asked this question recently at a session, as expected, answer of majority was “No”. Many think that multicolumn index is not beneficial unless all columns are used for filtering or columns from left-to-right, in order, are used. Order is important but SQL Server do leverage the index even for 2nd and 3rd columns. If your answer for the question above is “No”, here is a simple example for understanding it.

USE AdventureWorksDW2012

GO

-- creating an index using 3 columns

CREATE INDEX IX_DimProduct_1 ON dbo.DimProduct

(EnglishProductName, WeightUnitMeasureCode, SizeUnitMeasureCode)

-- filter using the first column

SELECT ProductKey, EnglishProductName

FROM dbo.DimProduct

WHERE EnglishProductName = 'Road-750 Black, 44'

-- filter using the second column

SELECT ProductKey, EnglishProductName

FROM dbo.DimProduct

WHERE WeightUnitMeasureCode = 'G'

-- filter using the third

SELECT ProductKey, EnglishProductName

FROM dbo.DimProduct

WHERE SizeUnitMeasureCode = 'CM'

-- cleaning the code

DROP INDEX IX_DimProduct_1 ON dbo.DimProduct

This code creates an index using three columns. As you see, the SELECT statements use the columns used for the index; first SELECT uses EnglishProductName, second uses WeightUnitMeasureCode, and third uses SizeUnitMeasureCode. Have a look on query plans;

Plans clearly show that SQL Server leverages the index for all three SELECT statements. However, note the way it has been used. For the first SELECT, Index Seek has been used but Index Scan for the second and third. This means, filtering with left-most columns gets more benefits but filtering with right-most columns do not get it optimally.

See below query, filtering starts on the left-most column and two columns are used. As you see, it is “Index Seek”; Index is used optimally.

-- filter using the first and second columns

SELECT ProductKey, EnglishProductName

FROM dbo.DimProduct

WHERE EnglishProductName = 'Road-750 Black, 44'

AND WeightUnitMeasureCode = 'G'

Saturday, October 5, 2013

Indexing with SQL Server – SS SLUG Aug 2013 - Presentation

Here is the presentation used for discussing SQL Server indexing. This discusses index structures, storage patters and different implementations addressing all versions, including SQL Server 2014.

This contains 40 slides including links for demo codes. Slides include;

- SQL Server Table Structures

- SQL Server Index Structures – Rowstore indexes

- Managing Rowstore Indexes

- SQL Server Index Structures – Columnstore indexes

What is Columnstore Index - SS SLUG Aug 2013 – Demo V

If you have worked with large databases (or data warehouses), you have already seen enough of scenarios where you do not get much benefits out of indexes, particularly with large data tables. SQL Server 2012 ColumnStore index structure was specifically introduced for addressing this issue.

This new structure is based on Microsoft Vertipaq technology and it goes as a non-clustered index. The different between this and traditional indexing is the storage. The indexes we have worked so far store data in a row-wise structure where as this stores data in a column-wise structure. Another different which make this unusable with OLTP databases is, structure becomes Read-Only.

How this exactly stores data was discussed in the user group meeting. Here are set of images that explains how SQL Server organizes data for ColumnStore indexes.

The first image shows a table that contains millions of records with hundreds of columns. If you execute a command for creating a ColumnStore non-clustered index, the first step of SQL Server is grouping records (image 2). Then it creates segments for each column in each group. Third image shows how 16 segments are created. SQL Server uses Dictionary Compression for compressing these segments. These segments will be stored in LOBs and become unit of transfer between the disk and the memory.

Let’s see how it can be used and the performance benefit. This sample code uses ContosoRetailDW and it can be downloaded at: http://www.microsoft.com/en-us/download/details.aspx?displaylang=en&id=18279.

Have a look on following query. The query accesses three tables (one is very large) and performs set of aggregations.

USE [ContosoRetailDW]

GO

SET STATISTICS IO ON

SET STATISTICS TIME ON

SELECT

p.ProductName

, d.CalendarYear

, d. CalendarMonth

, COUNT(*) TotalOrders

, SUM(f.SalesQuantity) Quantity

, SUM(SalesAmount) Amount

, SUM(CASE WHEN f.DateKey = '2009-12-31 00:00:00.000' THEN SalesAmount ELSE 0 END) AmountToday

FROM dbo.FactSales f

INNER JOIN dbo.DimDate d

ON d.Datekey = f.DateKey

INNER JOIN dbo.DimProduct p

ON p.ProductKey = f.ProductKey

GROUP BY

p.ProductName

, d.CalendarYear

, d. CalendarMonth

ORDER BY 1, 2, 3

SET STATISTICS IO OFF

SET STATISTICS TIME OFF

It has read around 19,000 pages and has taken 40 seconds.

Now, let’s create a ColumnStore index on the table. The recommended way is, include all columns when creating the index.

CREATE COLUMNSTORE INDEX IX_FactSales_CStore ON dbo.FactSales (

SalesKey, DateKey, channelKey, StoreKey, ProductKey, PromotionKey, CurrencyKey

, UnitCost, UnitPrice, SalesQuantity, ReturnQuantity, ReturnAmount, DiscountQuantity

, DiscountAmount, TotalCost, SalesAmount,ETLLoadID, LoadDate, UpdateDate)

This might takes minutes based on the number of records in the table. Once it is created, run the SELECT again and see the result.

As you see, number of pages that have been read is around 8,000 and time taken is 2 seconds. It is a significant improvement. However the biggest issue with this is, now table has become a read-only table. However this can be applied in partition level and partitions that contain history data can be benefited from this.

This link: http://technet.microsoft.com/en-us/library/gg492088.aspx gives all info regarding ColumnStore indexes. Have a look on it for understanding restrictions, limitations and scenarios you can use it.

Tuesday, August 27, 2013

Understanding Index INCLUDED COLUMNS - SS SLUG Aug 2013 – Demo III

Indexes are added mostly based on WHERE conditions used with queries execute frequently. Once the index is added, it speeds up the search on conditions added but does not improve the speed of loading values related to non-indexed columns. Adding non-indexed columns to the index and making all queries as part of the index is considered as Covering Index Pattern. However this post is not about Covering Index Pattern but something similar to it, called as Included Columns Pattern.

This was discussed in August user group meeting. This code is related to one of demos.

For testing purposes, I have used one of Microsoft sample databases which has millions of records. It is called ContosoRetailDW and it can be downloaded at: http://www.microsoft.com/en-us/download/details.aspx?displaylang=en&id=18279

Let’s create a new table using one of existing tables and add a clustered index on it.

USE ContosoRetailDW

GO

SELECT *

INTO dbo.FactSalesNew

FROM dbo.FactSales

CREATE UNIQUE CLUSTERED INDEX IX_FactSalesNew ON dbo.FactSalesNew (SalesKey)

GO

Enable “Actual Execution Plan” and run the query below.

SET STATISTICS IO ON

SELECT SalesKey, SalesAmount, ReturnAmount, DiscountAmount

FROM dbo.FactSalesNew f

INNER JOIN dbo.DimDate d

ON d.Datekey = f.DateKey

AND CalendarYear = 2007

WHERE ReturnAmount BETWEEN 1000 AND 1500

SET STATISTICS IO OFF

Have a look on pages read for loading the resultset into memory and have a look on execution plan.

This table has 3 million records and they are held using 52491 pages. As you see with the first result, SQL Server reads all pages for searching records (Clustered Index Scan) and returns only 2061 records. Let’s try to decrease the number of pages to be read by adding an index on ReturnAmount column.

CREATE INDEX IX_FactSalesNew_ReturnAmount ON dbo.FactSalesNew (ReturnAmount)

Once added, run the same query and see the result.

SQL Server uses the index added and number of pages read is 19930 now. It has improved the overall operation. However, can we improve little bit more? Yes, it is possible with included columns. You notice with the plan that even though there is an index, SQL Server still reads the clustered index because all other columns except RetuenAmount are not exist with the index. What if we include other columns into same index? See the below query.

DROP INDEX IX_FactSalesNew_ReturnAmount ON dbo.FactSalesNew

GO

CREATE INDEX IX_FactSalesNew_ReturnAmount ON dbo.FactSalesNew (ReturnAmount)

INCLUDE (DateKey, SalesAmount, DiscountAmount)

As you see, other columns used in the query are added to index now using INCLUDE clause. Remember, they have not been added as key columns, hence they cannot be used for searching. If a query is executed with “WHERE SalesAmount BETWEEN 1000 AND 1500”, SQL Server cannot use the index for searching. This is the key difference between Covering Index Pattern and Included Columns Patter. See the result of the SELECT query now.

Less number of pages to be read and no need to go through the clustered index. In this case, improvement is very high, however everything has pros and cons. Since additional columns are maintained in the index, remember that cost is involved with this when updating records.

Sunday, August 25, 2013

Using GUID column as Clustered Index Key– SS SLUG Aug 2013 – Demo II

GUIDs are commonly used in distributed applications which require “uniqueness” across the entire world. Unfortunately I have seen the usage of GUIDs with clustered keys in non-distributed applications, where global uniqueness is not required. This was discussed in my presentation and showed how useful the GUIDs as clustered key as well as how it makes the index fragmented. This post is for the demo code related to the discussion.

Before going through the code, we must understand that GUIDs are not as bad as we think if it is managed well. You can make an uniqueidentifier column as PRIMARY KEY, as clustered key. Although it does not as efficient as int data type, it gives moderate efficiency. Let’s look at how this makes the clustered index fragmented and how it can be avoided.

USE tempdb

GO

-- create a table with uniqueidentifier

-- and make it as the clustered key

IF OBJECT_ID('dbo.GUID_Table') IS NOT NULL

DROP TABLE dbo.GUID_Table

GO

CREATE TABLE dbo.GUID_Table

(

Id uniqueidentifier PRIMARY KEY

, name char(2000)

)

GO

-- insert 100 records with default values

INSERT INTO dbo.GUID_Table

VALUES

(NEWID(), 'a')

GO 100

SELECT * FROM sys.dm_db_index_physical_stats (

DB_ID(), OBJECT_ID('dbo.GUID_Table'), NULL, NULL, 'DETAILED')

Once the last statement is run, you will see how fragmented your table is. For more info on fragmentation, please refer: http://dinesql.blogspot.com/2013/08/understanding-index-fragmentation-ss.html.

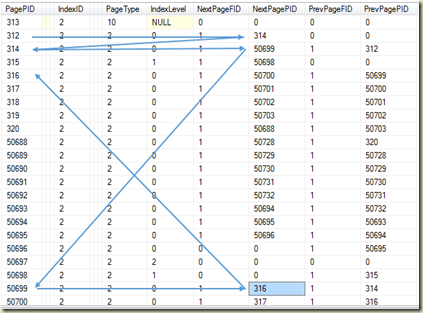

Both external fragmentation (97%) and internal fragmentation (54%) are very high. The reason is, page splits and records movement during insertions. Since GUIDs are not sequentially generated, record placement in pages is always an issue for SQL Server. What happen is, when a GUID is to be inserted as the key (entire record in this case), it looks for the page which record needs to be placed, and if no space in the page, it splits the page, moving 50% of records in the page to a new page, breaking the order of the pages which are ordered based on keys. Run the code below for seeing the linkage between pages.

DBCC IND (tempdb, [GUID_Table], -1)

As you see, SQL Server has to do many “read-back” for reading data sequentially, making all queries slowing down. The only way to avoid this with GUIDs is, use NEWSEQUENTIALID instead of NEWID. It generates GUIDs that are sequential to the last generated GUID. If Insertion is made using NEWSEQUENTIALID, external fragmentation will be lesser because of its sequential order on generation. Re-create the table and run the INSERT statement as below;

-- create table again

DROP TABLE dbo.GUID_Table

GO

CREATE TABLE dbo.GUID_Table

(

Id uniqueidentifier PRIMARY KEY DEFAULT (NEWSEQUENTIALID())

, name char(2000)

)

INSERT INTO dbo.GUID_Table

VALUES

(DEFAULT, 'a')

GO 100

SELECT * FROM sys.dm_db_index_physical_stats (

DB_ID('tempdb'), OBJECT_ID('GUID_Table'), NULL, NULL, 'DETAILED')

DBCC IND (tempdb, [GUID_Table], -1)

If you analyze PagePID and NextPagePID in DBCC IND result-set now, you will see how pages are ordered and no “read-backward” is needed. And the SELECT statement proves that no fragmentation has happened too. This clearly shows that with NEWSEQUENTIALID, split is not required as the value generated is always greater than the value exist. There are two key things not remember on it;

-

NEWSEQUENTIALID always generates a higher value greater than the one generated before by same server.

-

The uniqueness is limited to the server used only. Duplication can happen if values are generated with two servers.

Saturday, August 24, 2013

Understanding Index Fragmentation – SS SLUG Aug 2013 – Demo I

Index fragmentation is something we need to monitor frequently specifically on databases that are heavily indexed. If indexes added are fragmented, you will not get the expected performance from indexes. This is one of the areas discussed with last user group meeting, here is the sample codes that shows how indexes are getting fragmented.

Run below code to create a sample table with values.

USE tempdb

GO

-- creating test table

IF OBJECT_ID(N'dbo.TestTable', 'U') IS NOT NULL

BEGIN

DROP TABLE dbo.TestTable

END

GO

CREATE TABLE TestTable (Id int IDENTITY(1,1) PRIMARY KEY, [Value] varchar(900))

GO

-- Inserting sample values

DECLARE @a int, @b int

SET @a = 65

WHILE (@a < 91)

BEGIN

SET @b = 0

WHILE (@b < 20)

BEGIN

INSERT INTO TestTable ([Value])

SELECT REPLICATE(CHAR(@a), 445) + CONVERT(VARCHAR(10), @b)

SET @b = @b + 1

END

SET @a = @a + 2

END

GO

-- See the values inserted, [Value] column contains

-- values like AAAA..., CCCC..., EEEE..., etc

SELECT * FROM dbo.TestTable ORDER BY Id

-- making an index on [Value] column

CREATE INDEX IX_TestTable ON dbo.TestTable([Value])

GO

-- checking for internal fragmentation

SELECT * FROM sys.dm_db_index_physical_stats (DB_ID(), OBJECT_ID(N'dbo.TestTable', N'U'), 2, NULL, 'DETAILED')

The last statement which is DMF used to check the fragementation of indexes. Two columns used for checking the fragmentation, avg_fragmentation_in_percent for External fragmentation and avg_page_space_used_in_percent for Internal fragmentation.

Here are few points discussed regarding fragmentation;

- Internal fragmentation

Inefficient use of pages within an index because the amount of data stored within each page is less than the data page can contain.- <= 30% reorganize index

- > 30% rebuild index

- External fragmentation (Logical and Extent)

Inefficient use of pages within an index because the logical order of the page is wrong.- <= 75% and >= 60% reorganize index

- < 60% rebuild index

As per result, we do not need to worry much on fragmentation. SQL Server uses 16 pages for holding 260 records (averagely 16 records per page). For testing purposes, let’s fragment the index . Here is the code;

-- reducing the zize of the index key for some records

UPDATE dbo.TestTable

SET [Value] = LEFT([Value], 1)

WHERE Id % 2 = 0

-- checking for internal fragmentation

SELECT * FROM sys.dm_db_index_physical_stats (DB_ID(), OBJECT_ID(N'dbo.TestTable', N'U'), 2, NULL, 'DETAILED')

And the result is;

As you see, since we removed some characters from Value column, pages have space now, only 47% of space has been used. You really do not need 16 pages for holding 260 records now but SQL Server still holds records in 260 pages which is not efficient, making more IO and using more memory for your queries, decreasing the performance of them. Rebuilding the index sorts this out;

-- removing the fragmentation

ALTER INDEX IX_TestTable ON dbo.TestTable REBUILD

What you have to remember is, update like above makes your indexes fragmented, hence try to avoid such updates, if performed, make sure you check for fragmentation and defragment if required.

Let’s start from the beginning for understanding External fragmentation. It is all about breaking the order of the records. Run the below code for understanding the current order. Make sure you run the first code segment above (table creation and inserting)again before running this.

-- checking for external fragmentation

SELECT * FROM sys.dm_db_index_physical_stats (DB_ID(), OBJECT_ID(N'dbo.TestTable', N'U'),2, NULL, 'DETAILED')

-- run this undocumented command to see the NextPageID

DBCC IND (tempdb, 'TestTable', 2)

Here are the results;

Look at the first result, it says that External fragmentation of the clustered index is 18.5% which is okay. Next result is from DBCC IND command (For more info on DBCC IND, refer: http://dinesql.blogspot.com/2013/08/dbcc-ind.html) shows how pages are linked. Focus on PagePID and NextPagePID columns, and PageType column. Page type 2 represents index pages. Index level 0 represents leaf pages. This says that next page of 312is 314 and next page of 314 is 316, which is a perfect order.

Let’s make the table externally fragmented. Run the code below;

-- inserting new values such as

-- Bs, Ds, Fs, etc....

-- Making page-splits

DECLARE @a INT

SET @a = 66

WHILE (@a < 86)

BEGIN

INSERT INTO TestTable ([Value])

SELECT REPLICATE(CHAR(@a), 445) + CONVERT(VARCHAR(10), @a)

SET @a = @a + 2

END

GO

When records are inserted in between existing values (B has to be inserted between A and C), SQL Server needs to check and see the space availability of the correct page. If no space available, page-split occurs and 50% of the records are moved to a new page. This breaks the order of pages. Run the sys.dm_db_index_physical_stats and DBCC IND again and see;

We can clearly see what has happened to the order of pages, it is broken, it is fragmented. When you query data from this table (for example: data from pages 312, 341, 50699, 316), it has to read forward (which is fine) and read back (which is very costly) making more IOs and slowing down the generation of the resultset. If you rebuild the index, this can be fixed, however what you have to remember is, not to make your indexes externally fragmented from your actions, making query performance very poor. Here are some points you need to consider when dealing with index keys. These reduce external fragmentation.

-

Select a non-volatile columns for the keys.

-

Make sure column values are increasing values.

Presentation related to this demo is available at: SQL Server Universe.

DBCC IND

DBCC IND command is one of the important commands used when indexes are analyzed. Specifically this can be used for seeing the linkage between pages associated with a given table. It requires three parameters;

DBCC IND ( {‘db_name’ | ‘db_id’}, {‘table_name’ | ‘table_id’}, {‘index_name’ | ‘index_id’ | –1}

- db_name | ‘db_id – requires database name or id. If 0 or ‘’ passed, current database will be used.

- table_name | table_id – requires table name or object id of the table.

- index_name | index_id | –1 – requires index id of the table. If –1 is used, result is generated for all the indexes.

Here is brief on output of IND command.

| PageFID | File number where the page is located |

| PagePID | Page number for the page |

| IAMFID | File ID where the IAM page is located |

| IAMPID | Page ID for the page in the data file |

| ObjectID | Object ID for the associated table |

| IndexID | Index ID associated with the heap or index |

| PartitionNumber | Partition number for the heap or index |

| PartitionID | Partition ID for the heap or index |

| iam_chain_type | he type of IAM chain the extent is used for. Values can be in-row data, LOB data, and |

| PageType | Number identifying the page type; 1 - Data page |

| IndexLevel | Level at which the page exists in the page organizational structure. The levels are |

| NextPageFID | File number where the next page at the index level is located |

| NextPagePID | Page number for the next page at the index level |

| PrevPageFID | File number where the previous page at the index level is located |

| PrevPagePID | Page number for the previous page at the index level |

Here is an example for running the command;

DBCC IND (tempdb, 'TestTable', 2)