The cumulative update #3 for SQL Server 2012 is available for downloading now at: http://support.microsoft.com/kb/2723749. Make sure you apply this to test environment first and check before applying to live environment.

Thursday, August 30, 2012

Sunday, January 22, 2012

TABLE Type and MERGE Statement

As I get many offline questions regarding the presentation “Reducing RoundTrips” I did, thought to summarize the content we discussed as a post. Session spoke about how to reduce multiple database calls from client to database application when an updated data set (Ex. data table bound to a grid) is required to send from client application to database. In addition to that we discussed the way of handling all three operations: INSERT, UPDATE, and DELETE using a single statement. This is what the session focused on;

MERGE

MERGE statement is used to insert data that does not exist but to update if it does exist. It is an extension of UPSERT which is a known term in other database management systems for performing both UPDATE and INSERT statement using a single technique. The main benefit of this is the facility to manipulate many number of records in a single table using a source as an atomic operation. This eliminates individual statements for INSERT, UPDATE and DELETE operations, one statement which is MERGE handles all three operations. MERGE statement has following elements;

- Target: This the table or view (destination) which needs to be updated.

- Source: This contains updated data (modified, newly added) and will be used for updating the target. This can be a table, view, derived table, CTE, or table function.

- WHEN MATCHED [AND] ...... THEN: This is where the action which needs to be performed when the row in the source is found in the target, should be written. Two WHEN MATCHED clauses are allowed, limiting one for UPDATE as an action and other for DELETE as an action.

- WHEN NOT MATCHED [BY TARGET] ...... THEN: The action which performs when the row in the source is not found in the target, has to be written with this. It is usually the INSERT action.

- WHEN NOT MATCHED [BY SOURCE] ...... THEN: This is to perform an action when the row in the target is not supplied with the source. Usually it is DELETE.

- OUTPUT clause and $Action: The standard inserted and deleted virtual tables are available with MERGE. In addition to that $Action provides the operation performed; INSERT, UPDATE, DELETE. This is normally used for logging/auditing.

In order to use MERGE, a source must be defined and filled. Usually the source is defined and filled by the client but for various limitations and less facilities, we used to iterate the filled-source at the client site itself and send one row at a time to database, which makes many calls to database for updating all records in the source.

TABLE Type

This can be overcome using TABLE data type. TABLE data type can be created as a user-defined data type definition and can be used as either variable or parameter. Following are the key elements of creating a type;

- CREATE TYPE: This is used for creating the user defined type. It has to be created as a TABLE and structure has to be defined.

- READONLY keyword: If the type is used as a parameter, READONLY keyword has to be used. It is as an OUTPUT parameter is not supported.

- SqlDbType.Structured: When a data table from a .NET application is sent, parameter data type must be declared as System.Data.SqlDbType.Structured.

Here is a sample code for all;

This code creates a table called Customer and inserts 4 rows. In addition to that it creates a log table too, for recording operations.

USE tempdb

GO

-- create a table for customer

CREATE TABLE dbo.Customer

(

CustomerId int identity(1,1) not null

, CustomerCode char(4) not null

, FirstName varchar(25) null

, LastName varchar(25) not null

, CreditLimit money not null

, IsLocal bit not null

, LastModified smalldatetime not null

)

GO

-- insert four records

INSERT INTO Customer

(CustomerCode, FirstName, LastName, CreditLimit, IsLocal, LastModified)

VALUES

('C001', 'Dinesh', 'Priyankara', 10000, 1, getdate())

, ('C002', 'Jane', 'K', 10000, 1, getdate())

, ('C003', 'Martinie', 'Ku', 10000, 0, getdate())

, ('C004', 'Joe', 'Anderson', 10000, 0, getdate())

GO

CREATE TABLE dbo.CustomerLog

(

CustomerCode char(4) not null

, DateModified datetime not null

, Action varchar(15) not null

)

GO

Here is the stored procedure for accepting an updated data set using TABLE type and updating Customer using MERGE.

-- create a stored procedure for accepting created TABLE type as a parameter

-- note that READONLY has to be used

CREATE PROC dbo.InsertCustomer @CustomerType dbo.CustomerType READONLY

AS

BEGIN

INSERT INTO dbo.CustomerLog

(CustomerCode, DateModified, [Action])

SELECT o.CustomerCode, getdate(), o.[Action]

FROM

(MERGE INTO dbo.Customer AS c

USING @CustomerType AS t

ON c.CustomerCode = t.CustomerCode

WHEN MATCHED AND t.IsLocal = 0 THEN

UPDATE SET c.FirstName = t. FirstName

, c.LastName = t.LastName

, c.CreditLimit = t.CreditLimit

, c.LastModified = getdate()

WHEN NOT MATCHED THEN

INSERT (CustomerCode, FirstName, LastName, CreditLimit, IsLocal, LastModified)

VALUES

(t.CustomerCode, t.FirstName, t.LastName, t.CreditLimit, t.IsLocal, getdate())

WHEN NOT MATCHED BY SOURCE THEN

DELETE

OUTPUT isnull(inserted.CustomerCode, deleted.CustomerCode) as CustomerCode, $ACTION AS Action) AS o

END

GO

Everything is ready from database level. Here is a sample code for .NET application.

SqlConnection connection = new SqlConnection(@"Server=.\SQL2008R2;Integrated Security=SSPI;Database=tempdb");

DataTable customer = new DataTable("Customer");

customer.Columns.Add("CustomerCode", typeof(string));

customer.Columns.Add("FirstName", typeof(string));

customer.Columns.Add("LastName", typeof(string));

customer.Columns.Add("CreditLimit", typeof(decimal));

customer.Columns.Add("IsLocal", typeof(bool));

customer.Rows.Add("C001", "Dinesh", "Priyankara", 10000, true); // no change and no update

customer.Rows.Add("C002", "Jane", "Kani", 25000, true); // should not update

customer.Rows.Add("C004", "Joe", "Andrew", 35000, true); // update all

customer.Rows.Add("C005", "Kate", "Neo", 35000, true); // new record

SqlCommand command = new SqlCommand("InsertCustomer", connection);

command.CommandType = CommandType.StoredProcedure;

SqlParameter parameter = new SqlParameter("@CustomerType", System.Data.SqlDbType.Structured);

parameter.Value = customer;

command.Parameters.Add(parameter);

connection.Open();

command.ExecuteNonQuery();

connection.Close();

Enjoy!

Tuesday, November 1, 2011

Is SQL Server @@IDENTITY a Global Function?

The @@IDENTITY is a system function that returns last generated identity value. But the question is, is it the last identity generated by my code? Last generated by my session? Or last generated by the system for any user?

Understanding what exactly it returns help you to code your database modules accurately. Let’s try this with a small example.

CREATE TABLE T1 (Id int IDENTITY(1,1), Value varchar(20))

CREATE TABLE T2 (Id int IDENTITY(100,1), Value varchar(20))

GO

CREATE PROC InsertT2

AS

BEGIN

INSERT INTO T2 (Value) VALUES ('Test')

END

GO

CREATE PROC InsertT1

AS

BEGIN

INSERT INTO T1 (Value) VALUES ('Test')

EXEC InsertT2

SELECT @@IDENTITY

SELECT SCOPE_IDENTITY()

END

GO

EXEC InsertT1

The result of InsertT1 execution will 100 and 1. As you see in the result, @@IDENTITY returns the last generated identity value for my session, not for the scope I am in. If you use this function to get the last generated identity value for your insert, result of it may be wrong unless no other code is executed that inserts a record to another table with IDENTITY property enabled. Note that SCOPE_IDENTITY returns the identity value for the scope, hence if the requirement is for get the value generated for current scope, use it.

There is another function that can be used for getting generated identity value, which is called IDENT_CURRENT. This function accepts the table name as a parameter and returns the last generated identity value for given table, by any scope, any session, any connection.

Monday, September 19, 2011

Reporting Services Parameters: Adding “All” item.

There are some instances we have to create parameters with multiple items, including an item representing all items in the list, mostly called as “All”. There are various ways of doing it. If the list is dynamic and data source is OLAP, you get this item automatically. If it is not OLAP, and loading from OLTP database, this item has to be manually added and programmatically handled.

Here is an easy way to handle it. Have this additional item in a new query and use UNION for joining it to main query.

SELECT 'All Years' AS OrderYear

, 0 AS OrderYearValue

UNION

SELECT DISTINCT

CONVERT(varchar(100), YEAR(OrderDate)) AS OrderYear

, YEAR(OrderDate) as OrderYearValue

FROM Sales.SalesOrderHeader

ORDER BY 2

Now data source contains the item. In order to get this handled with query parameter, follow below code;

SELECT {your columns}

FROM Sales.SalesOrderHeader

WHERE YEAR(OrderDate) = @Year

OR @Year = 0

Happy Coding .

Wednesday, August 31, 2011

PIVOT and UNPIVOT: Integration Services

As you all know, Pivoting and Unpivoting are supported with SSIS but the implementation of PIVOTing is bit difficult when compared with UNPIVOTing. This post explains the implementation of both PIVOT and UNPIVOT transformations that come with SSIS with all possible explanations.

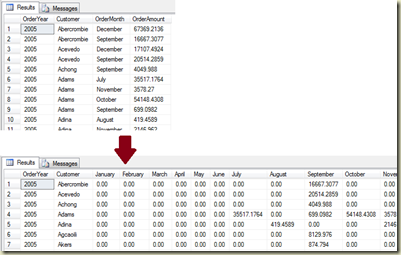

Pivot allows us to convert normalized dataset into less normalized format, transferring (pivoting) row values into columns. In simple term, it identifies distinct values in a designated column and makes columns based on identified values (note that it is not fully dynamic and automated). Here is an example.

Example shows how PIVOT works, you can see that values (months) in OrderMonth column have been converted into columns, combining with OrderAmount column. Now let’s see how we can do this with SSIS. The code below is the source for pivoting that returns Orders placed by customers for months and years.

1: SELECT

2: YEAR(OrderDate) OrderYear

3: , p.LastName Customer

4: , DATENAME(mm, OrderDate) OrderMonth

5: , SUM(SubTotal) OrderAmount

6: FROM Sales.SalesOrderHeader h

7: INNER JOIN Sales.Customer c

8: ON c.CustomerID = h.CustomerID

9: INNER JOIN Person.Person p

10: ON p.BusinessEntityID = c.PersonID

11: GROUP BY

12: YEAR(OrderDate)

13: ,p.LastName

14: ,DATENAME(mm, OrderDate)

15: ORDER BY 1, 2

In order to do this in SSIS, create a new project and have a Data Flow Task in Control Flow. Place an OLE DB Data Source in Data Flow Task and set the above query, making the connection to AdventureWorks2008R2 database. Then, drag PIVOT transformation on to Data Flow and connect them using Connection Line.

Pivot configuration is little bit tricky, not as easy as T-SQL PIVOT. When you open the Editor of it, you get three tabs. Second tab allows you to marks columns for pivoting, selecting them from input flow.

Next tab which is Input and Output Properties, is for instructing SSIS the way of using input columns for pivot operation. The Pivot Default Input node in left pane allows us to set the usage of input columns for pivoting. The Pivot Default Output allows us to form the output columns based on input columns and their usage. Let’s first have a look on Pivot Default Input node.

If you expand the next level of Pivot Default Input, which is Input Columns, you can see all columns available for the operation. Selecting one column loads its properties to right pane, which contains a property called PivotUsage that accepts a numeric value from 0 to 3. Here is the way of deciding the value for PivotUsage property.

| Value | Meaning |

| 0 | Column does not participate in PIVOTing, it is passed through unchanged to the output. If PIVOTing identifies multiple values in an output row, only the first value of them will be used with the output row.

In our example, we do not have any column to be marked as 0. |

| 1 | Column is the key (Set key) for generating the pivot output rows. Values in this column will be used to identify unique values for rows. We can mark one or more columns as 1 but need to make sure that combination of them make record unique without providing any duplicates.

In our example, we have to column to mark as 1: OrderYear and Customer. |

| 2 | Values in this column (Pivot Column) will be used for creating columns in the pivot output.

In our example, OrderMonth is the column which should be marked as 2. |

| 3 | Values in this column will be used to generate the values for columns created from pivot column.

In our example, OrderAmount should be set as 3. |

Here is the way of setting it;

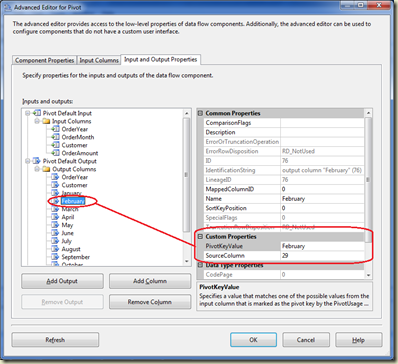

Next step is configuring Pivot Default Output. If you expand the node, Output Columns node is appeared. In order to defines columns, including columns created through pivot column, we have to create them. Make sure you have selected Output Columns node and click on Add Column for creating 14 columns. Once the columns are created, name them with Name property as OrderYear, Customer, January, February, March, April, May, June, July, August, September, October, November, and December.

Next is, linking output columns with input columns. This will be done through a property called SourceColumn in output columns. The value of the SourceColumn has to be taken from relevant input column’s property called LineageID. Find the LineageID of OrderYear input column (in my case it is 17, see above image) and set it in SourceColumn of OrderYear output column. Do the same for Customer column too.

Next columns are month columns. The property SourceColumn of all month columns has to be set with LineageID of OrderAmount. In addition to that, a property called PivotKeyValue should be filled too. This property should be filled with values coming from PivotColumn. For example, January column’s property should be filled with “January”, and February column’s property should be filled with “February”.

Package is done. Let’s send the output to an Excel file for testing purposes. Here is the package execution and the output of it.

SSIS UNPIVOT is not as tricky/difficult as PIVOT. Let’s see how UNPIVOTing can be done with SSIS. Easiest way to understand this is, reversing the process, unpivoting Excel sheet created with previous example. Let’s have a Data Flow Task and Excel Source on it, connecting to the Excel we created. Add an Unpivot transformation and connect it with the source.

Let’s configure UNPIVOT transformation. Open the Unpivot Transformation Editor and configure it as follows;

-

Select Pass Through checkbox only for OrderYear and Customer.

-

Select all month columns. This loads all months into the grid.

-

Give a destination column name (Eg. OrderAmount) for month columns. Make sure that same name is set for all columns. This column will hold values related to months.

-

Make sure Pivot Key Value has been properly set. This value will be set as a row value for column which will be unpivoted.

-

Finally, give a name for Pivot Key Value column (OrderMonth).

Done. Have a destination as you want and see the result.

Sunday, July 10, 2011

Default Logging: Microsoft SQL Server Integration Services

Everybody knows how important logging is, when it comes for troubleshooting. It applies for SSIS packages too. Unfortunately, most of developers, pay not much attention for this, and later regret for not having any records on package executions. If you have not implemented any mechanisms for logging and need to know how often the package gets executed or whether the package is started, you are not stranded. Integration Services has done the needful for you.

Integration Services has a default logging behavior. It captures starting and ending events, and adds two log entries to Windows Event Viewer. If you run the package with Business Intelligence Studio while it is being designed, you should see two entries in Windows Event Viewer as follows;

This behavior cannot be stopped. It logs not only when the package is run with BIDS, with DTEXEC too. As per my experience this is not enough for troubleshooting if it is a complex package, so, make sure you have used other out-of-the-box logging features for recoding your package processes.

Tuesday, July 5, 2011

How the language “SQL” is born?

Simple thing that you may not know ……….

Structured Query Language was born in 1970, supporting Edgar F. Codd’s Relational Database Model. It was invented at IBM by Donald D. Chamberlin and Raymond F. Boyce (who introduced 3.5 NF) for IBM’s RDBMS called System R. The initial name of it was Structured English Query Language (SEQUEL) but changed to SQL later as SEQUEL was a trademark of another company.

The first commercially available implementation of SQL was released by Relational Software Inc. (now known as Oracle Corporation). It was in June 1979, for Oracle V2. Relational Software Inc. started developing their own RDBMS based on Codd’s theories in 1970s.

Not only SQL, there were other RDBMS and SQL related languages. In 1970, University of California, Berkeley created a RDBMS named Ingres (Known as Open Source RDBMS) and QUEL was the language created for managing its data. With various different implementations, later it evolved into PostgreSQL.

IBM continued with its System R and SQL Implementation, making it as a commercial product named System38. It was in 1979. Now it has been evolved into DB2 which was released in 1983.

SQL has many extensions now. Some of them are;

- Oracle – PL/SQL

- IBM – SQL PL

- Microsoft – T-SQL

SQL was standardized by American National Standard Institute (ANSI) in 1986 as SQL-86. In 1987, it was standardized by International Organization for Standardization (ISO) too. It has been revised in many times, starting with SQL-86 to SQL:2008.

Sunday, July 3, 2011

Script Task cannot be debugged: Integration Services

If you run on 64-bit environment and try to debug a script task after placing a breakpoint, it is not going to work as you expect. The reason for this is 64-bit environment. There are few things that do not support on 64-bit mode but you can still configure them with 32-bit SSIS designer; Business Intelligence Development Studio. If you experience problems such as connecting to Excel via Microsoft OLE DB Provider for Jet or debugging Script task, while running in 64-bit mode, all you have to do is, changing the SSIS runtime into 32-bit. This is done by changing a property called Run64BitRuntime on the Debugging page.

Note that this property is used and applied only at design time. When you execute the package in a production server, environment for it is based on installed dtexec utility. The dtexec utility is available in 64-bit mode. If you execute the package in 64-bit environment, 64-bit dtexec will be automatically selected and run. If need it to be executed in 32-bit mode, use command prompt for running it. When you install 64-bit Integration Services, it installs both 32-bit and 64-bit. As command prompt uses PATH environment variable for finding directories, and 32-bit version path (C:\Program Files(x86)\....) appears before 64-bit version path, it uses 32-bit dtexec for executing packages.

If the package needs to be scheduled with SQL Agent and run in 32-bit mode, that has to be specifically instructed as SQL Agent uses 64-bit version without using PATH environment variable. It can be done by setting Use 32 bit runtime on the Execution Options of the Job Step.

Sunday, May 15, 2011

How the term “Business Intelligence” is born?

This is not about processes or usage of Business Intelligence solutions, it is about usage of this term. Unfortunately it looks like this is unknown to many (Unawareness for this is reasonable but I can remember that when a picture of Charles Babbage is shown at a session, many did not recognize or aware of him).

The term “Business Intelligence” firstly appeared in 1958. It was used by a IBM researcher, Hans Peter Luhn, in one of his articles. His definition for it was;

“The ability to apprehend the interrelationships of presented facts in such a way as to guide action towards a desired goal”

Although Hans introduced (or used) this word for processes related to business intelligence, it was not a widespread term until late 1990s. It was the time for Decision Support Systems. In 1989, Howard Dresner proposed “Business Intelligence” as an umbrella term but it looks like the real usage of the term started in 1996. Later Howard became an Analyst for Gartner Group, and it is Gartner Group (Gartner, Inc.) who labeled this on collective technology used for DSSs. This is how it was published;

“Data analysis, reporting, and query tools can help business users wade through a sea of data to synthesize valuable information from it—today these tools collectively fall into a category called ‘Business Intelligence.’”

Thursday, May 12, 2011

PerformancePoint web-parts cannot be connected (linked)

Few days back, I faced for a strange issue with PerformancePoint web-parts. It was a simple thing, all I had was, a web page created with SharePoint and couple of web-parts. Once the page is open in design mode and web-parts are placed, tried to link two web-parts (a report web-part and a filter) through a connection as below;

Funny thing was, though it allowed me to create the connection (link) and save, it did not get saved permanently. If I open the connection window again, the created connection is missing.

I could not find the issue immediately but it was a terrible thing. Thanks for my colleagues, they have found the issue. It is with Internet Explorer. If you try to do this with Internet Explorer 8.x, it is not going to work. For some of my colleagues, older versions of IE have been worked. For some, older version of FireFox has been worked. Finally what I did was, installed FireFox 3.6 and got it done. Note that it is not going to work with FireFox 4.x too.

Anyone faced this issue? Why SharePoint gives an issue with latest browsers? If you know anything on this, please share with me.

Sunday, May 1, 2011

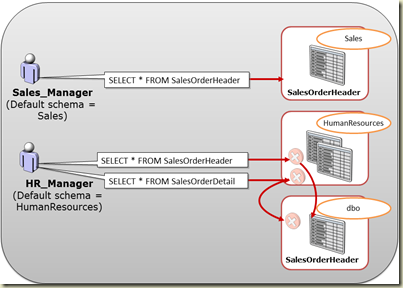

Object Name Resolution – SQL Server

Understanding how SQL Server resolves objects’ names will be an effective remedy against performance issues. This name resolution happens if securables are not fully qualified. A statement like SELECT SalesOrderID FROM SalesOrderHeader will be a candidate for this. As you see, the SalesOrderHeader is not written as a fully qualified name, hence SQL Server tries to resolve it by adding Schema owned by user connected. If SQL Server cannot find an object like ConnectedUser’sSchema.SalesOrderHeader then it tries to find an object called dbo.SalesOrderHeader as the second step. Error is thrown, if it is not successful too. Here is an example for it;

USE AdventureWorks2008

GO

-- creating a new table with dbo schema

SELECT TOP (1) *

INTO SalesOrderHeader

FROM Sales.SalesOrderHeader

-- Create two logins for testing

CREATE LOGIN HR_Manager WITH PASSWORD = '123'

, CHECK_POLICY = OFF;

CREATE LOGIN Sales_Manager WITH PASSWORD = '123'

, CHECK_POLICY = OFF;

GO

-- Adding them as users with default schemas

CREATE USER HR_Manager WITH DEFAULT_SCHEMA = HumanResources

CREATE USER Sales_Manager WITH DEFAULT_SCHEMA = Sales

GO

-- Given users permission on data reading

sp_addrolemember 'db_datareader', 'HR_Manager';

sp_addrolemember 'db_datareader', 'Sales_Manager';

-- Execute as HT_Manager and see

EXECUTE AS USER = 'HR_Manager'

GO

-- This shows records from newly created

-- table, not from original table

SELECT * FROM SalesOrderHeader

-- This throws an error

SELECT * FROM SalesOrderDetail

REVERT

GO

-- Execute as Sales_Manager

EXECUTE AS USER = 'Sales_Manager'

GO

-- Both statements will work

SELECT * FROM SalesOrderHeader

SELECT * FROM SalesOrderDetail

REVERT

GO

As you see with the code, when HR_Manager executes SELECT statements, as the first step, names of them will be resolved as HumanResources.SalesOrderHeader and HumanResources.SalesOrderDetail. SQL Server does not find any objects and do the next step, resulting dbo.SalesOrderHeader and dbo.SalesOrderDetail. A match will be found for first one as we have created one with dbo schema but not for the second. In that case, second statement throws an error.

When Sales_Manager executes, SQL Server resolves objects’ names as Sales.SalesOrderHeader and Sales.SalesOrderDetail. In that case, both statements are successfully executed.

Even though Sales_Manager executes statements without any error, it would be always better to make securables as fully qualified objects, that helps SQL Server to quickly execute the code without performing an additional task.

Saturday, April 30, 2011

Database Option: CURSOR DEFAULT {LOCAL | GLOBAL} - Is CURSOR Global?

Setting the value of this option as GLOBAL has been understood wrongly by many developers. All you have to remember is, this “global” does not refer all connections, it refers the current connection. If either database option or at the declaration of cursor, GLOBAL is set, it means that scope of the cursor is global to the connection. Simply it can be referenced in any stored procedure or batch executed by same connection. If LOCAL is used, it is only for the batch which it was created. See the code below;

-- Parent SP with a cursor

CREATE PROC TestCursorParentSP

AS

BEGIN

DECLARE @SalesOrderID int

DECLARE cur CURSOR GLOBAL

FOR

SELECT SalesOrderID

FROM Sales.SalesOrderHeader

OPEN cur

FETCH NEXT FROM cur INTO @SalesOrderID

SELECT @SalesOrderID

EXEC TestCursorChildSP

CLOSE cur

DEALLOCATE cur

END

-- Child SP that accesses cursor declared by parent

CREATE PROC TestCursorChildSP

AS

BEGIN

DECLARE @SalesOrderID int

FETCH NEXT FROM cur INTO @SalesOrderID

SELECT @SalesOrderID

END

-- Executing parent sp

-- Get values from both SPs

EXEC TestCursorParentSP

If the cursor is declared as LOCAL, child SP will not be able to access it.

Tuesday, April 26, 2011

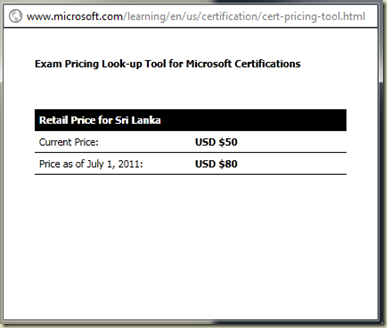

New Prices for Microsoft Certifications Exams

If you plan for doing MS exams, better hurry up. Following certifications are slated for a price increase:

-

Microsoft Certified Technology Specialist (MCTS)

-

Microsoft Certified IT Professional (MCITP)

-

Microsoft Certified Professional Developer (MCPD)

-

Microsoft Certified Desktop Support Technician (MCDST)

-

Microsoft Certified Systems Administrator (MCSA)

-

Microsoft Certified Systems Engineer (MCSE)

-

Microsoft Certified Application Developer (MCAD)

-

Microsoft Certified Solution Developer (MCSD)

-

Microsoft Certified Database Administrator (MCDBA)

See more detail:

http://www.microsoft.com/learning/en/us/certification/cert-pricing-FAQ.aspx

Sunday, April 24, 2011

TOP WITH TIES: Have you shown your TOP 10 Customers properly?

Think about a scenario where you have to show your top 10 customers based on their purchases. If the record set is as below;

and if you show first 10 records using TOP 10, have you shown all best customers? See the 11th record, it is same as 10th order, hence he should be added to top-10 list. In this case, top-10 list becomes top-11 list. But we cannot change the query as TOP 11 because it is not always guaranteed that 11th record is same as 10th record. In that case, what would be the way?

The solution is, TOP 10 WITH TIES. It adds additional rows to the resultset if any tied rows for 10th are there. See the code below;

Note that WITH TIES can be only used with SELECT statement and ORDER BY must be used too.