Fact tables in data warehouses are usually partitioned, and in most solutions they are partitioned on a date key. Generally, we use SSIS for loading dimension and fact tables. An ETL written for loading a fact table can be optimized by loading the data into another table and then switching that table into an empty partition of the fact table. This is one of the best practices in data warehouse loading, and the technique is not complex.

Assume you have a fact table that is partitioned by month, for example one partition for 201601, another for 201602, and so on, and data has been loaded up to July 2016 (201607). When we load August data, we can load it into a new table that has the same structure and then switch that table into the 201608 partition of the fact table. This optimizes the load and minimizes the impact on the fact table. The diagram below shows it.

Here is how to do it. I will use the same fact table created in this post: SSIS - Fact Loading with Dimension Type II and Dimension Type I. You can use the code from that post to create the dimension and fact tables and try this out. In that post, I loaded data for June and July 2016. Let's see how we can load August data using the technique discussed here.

1. Let's use the following data set as the August data.

```sql
SELECT CONVERT(date, '2016-08-01') AS TransactionDate, 22 AS ProductId, 100 AS CustomerId, 200 AS EmployeeId, 500 AS SalesAmount, 2 AS SalesQuantity
UNION ALL
SELECT CONVERT(date, '2016-08-12') AS TransactionDate, 23 AS ProductId, 101 AS CustomerId, 200 AS EmployeeId, 500 AS SalesAmount, 2 AS SalesQuantity
UNION ALL
SELECT CONVERT(date, '2016-08-14') AS TransactionDate, 23 AS ProductId, 100 AS CustomerId, 201 AS EmployeeId, 500 AS SalesAmount, 2 AS SalesQuantity
UNION ALL
SELECT CONVERT(date, '2016-08-22') AS TransactionDate, 22 AS ProductId, 100 AS CustomerId, 201 AS EmployeeId, 500 AS SalesAmount, 2 AS SalesQuantity
```

2. We need a separate table with the same structure to receive the processed data. The code below creates another table to hold the data temporarily. Note that it has the same columns and is partitioned in the same way as the FactSales table.

```sql
CREATE TABLE FactSalesTemp
(
    DateKey int NOT NULL INDEX IX_FactSalesTemp CLUSTERED,
    ProductKey int NOT NULL,
    CustomerKey int NOT NULL,
    EmployeeKey int NOT NULL,
    SalesAmount money NOT NULL,
    SalesQuantity smallint NOT NULL
)
ON ps_SalesDate (DateKey)
GO
```
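Switching only works when both tables are partitioned in the same way, so it can be worth confirming that before loading anything. The query below is a minimal sketch that lists the partition scheme and function behind each table. It assumes both tables exist in the current database.

```sql
-- Sketch: list the partition scheme and function used by each table.
-- Assumes FactSales and FactSalesTemp exist in the current database.
SELECT
    t.name  AS TableName,
    i.name  AS IndexName,
    ps.name AS PartitionScheme,
    pf.name AS PartitionFunction
FROM sys.tables AS t
JOIN sys.indexes AS i
    ON i.object_id = t.object_id
   AND i.index_id IN (0, 1)          -- heap or clustered index only
JOIN sys.partition_schemes AS ps
    ON ps.data_space_id = i.data_space_id
JOIN sys.partition_functions AS pf
    ON pf.function_id = ps.function_id
WHERE t.name IN (N'FactSales', N'FactSalesTemp');
```

If the setup matches this post, both rows should show ps_SalesDate as the scheme and pf_SalesDate as the function.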

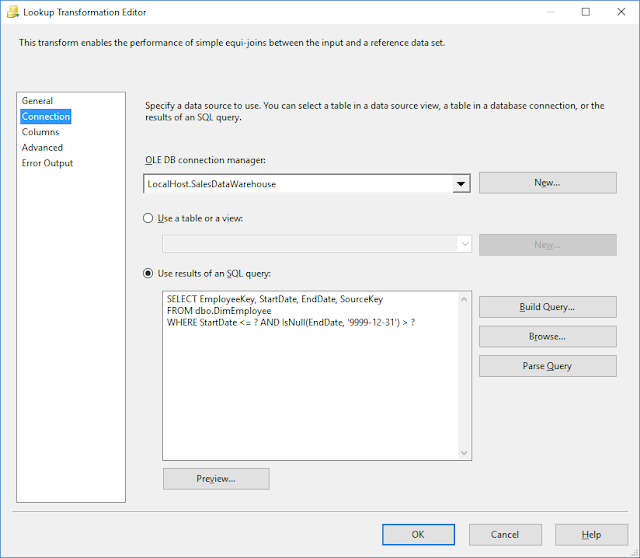

3. I will be using the same SSIS package used in my previous post. As you can see, the destination is now set to the newly created table, FactSalesTemp.

4. Before loading data into the new table, let's add another partition for the August data. Because both tables use the same partition scheme and function, the change applies to both. All we need to do is mark the next filegroup on the scheme and add a boundary value to the function:

```sql
-- Add another file group to the scheme
ALTER PARTITION SCHEME ps_SalesDate NEXT USED [PRIMARY]
GO

-- Split the last partition by adding another boundary value
ALTER PARTITION FUNCTION pf_SalesDate () SPLIT RANGE (20160801)
GO
```
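To confirm the split, the boundary values of the function can be listed from the catalog views. This is a small sketch; the key 20160815 is only an illustrative value, and the partition number returned for it depends on how pf_SalesDate was originally defined.

```sql
-- Sketch: list the current boundaries of the partition function.
SELECT
    prv.boundary_id,
    prv.value AS BoundaryValue,
    pf.boundary_value_on_right       -- 1 = RANGE RIGHT, 0 = RANGE LEFT
FROM sys.partition_functions AS pf
JOIN sys.partition_range_values AS prv
    ON prv.function_id = pf.function_id
WHERE pf.name = N'pf_SalesDate'
ORDER BY prv.boundary_id;

-- Which partition would an August key land in? (illustrative key)
SELECT $PARTITION.pf_SalesDate(20160815) AS PartitionNumber;
```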

5. Now we can execute the SSIS package and load the data into the newly created table. Once the data set is loaded into FactSalesTemp, we can check both tables and see how the partitions are filled.

How partitions are filled:

How tables are filled:
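Both checks can be reproduced with queries along these lines. This is a sketch, not necessarily the queries used for the original screenshots. It assumes DateKey holds integer keys in yyyymmdd form (as the boundary value 20160801 suggests). Note that the row counts in sys.partitions are approximate.

```sql
-- Rows per partition for both tables
SELECT
    OBJECT_NAME(p.object_id) AS TableName,
    p.partition_number,
    p.rows
FROM sys.partitions AS p
WHERE p.object_id IN (OBJECT_ID(N'dbo.FactSales'), OBJECT_ID(N'dbo.FactSalesTemp'))
  AND p.index_id IN (0, 1)           -- heap or clustered index only
ORDER BY TableName, p.partition_number;

-- Rows per month in each table (assumes DateKey is an int such as 20160801)
SELECT 'FactSales' AS TableName, DateKey / 100 AS YearMonth, COUNT(*) AS RowsLoaded
FROM dbo.FactSales
GROUP BY DateKey / 100
UNION ALL
SELECT 'FactSalesTemp', DateKey / 100, COUNT(*)
FROM dbo.FactSalesTemp
GROUP BY DateKey / 100
ORDER BY TableName, YearMonth;
```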

6. As you can see, the data has been loaded into the newly created table and sits in partition 9. Now we need to switch that partition of the new table into the 9th partition of FactSales. Here is how to do it.

```sql
ALTER TABLE dbo.FactSalesTemp SWITCH PARTITION 9 TO dbo.FactSales PARTITION 9;
```
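The partition number does not have to be hardcoded. The sketch below looks it up with $PARTITION and refuses to switch if the target partition already holds rows, since SWITCH requires the target partition to be empty. The variable names and the error message are illustrative only.

```sql
-- Sketch: resolve the partition number from a date key, then switch.
DECLARE @DateKey int = 20160801;   -- any key inside the month being loaded
DECLARE @PartitionNumber int = $PARTITION.pf_SalesDate(@DateKey);

IF EXISTS (SELECT 1
           FROM dbo.FactSales
           WHERE $PARTITION.pf_SalesDate(DateKey) = @PartitionNumber)
BEGIN
    -- The target partition must be empty before a switch
    RAISERROR('Target partition %d in FactSales is not empty.', 16, 1, @PartitionNumber);
END
ELSE
BEGIN
    ALTER TABLE dbo.FactSalesTemp
        SWITCH PARTITION @PartitionNumber
        TO dbo.FactSales PARTITION @PartitionNumber;
END
```

With the data used in this post, @PartitionNumber should resolve to 9, which matches the hardcoded statement.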

7. Now if you check the records, you will see that FactSales contains the new data and that it is in the correct partition.

To complete the SSIS package, you can alter the scheme and function in the Control Flow with an Execute SQL Task placed before the Data Flow Task, and switch the partition with another Execute SQL Task placed just after the Data Flow Task.
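For the first Execute SQL Task, a guarded version of the split can make the package safe to re-run, because splitting on a boundary value that already exists raises an error. The following is a minimal sketch under that assumption. @Boundary is a hypothetical variable; in a package its value could come from an SSIS variable mapped as a parameter.

```sql
-- Sketch: split only when the boundary is not there yet.
DECLARE @Boundary int = 20160801;  -- hypothetical: first day of the month to load

IF NOT EXISTS (
    SELECT 1
    FROM sys.partition_functions AS pf
    JOIN sys.partition_range_values AS prv
        ON prv.function_id = pf.function_id
    WHERE pf.name = N'pf_SalesDate'
      AND CONVERT(int, prv.value) = @Boundary
)
BEGIN
    ALTER PARTITION SCHEME ps_SalesDate NEXT USED [PRIMARY];
    ALTER PARTITION FUNCTION pf_SalesDate() SPLIT RANGE (@Boundary);
END
```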